Engineering Life: Core Principles of Synthetic Biology Revolutionizing Biomedical Engineering

Abstract

This article provides a comprehensive exploration of synthetic biology principles and their transformative impact on biomedical engineering. Tailored for researchers, scientists, and drug development professionals, it systematically examines foundational concepts, from genetic circuit design to synthetic biomaterials. The scope spans methodological applications in therapeutic development, troubleshooting of real-world deployment challenges, and validation through computational modeling and automation. By integrating the latest advances in AI-driven biodesign, automation, and cell-free systems, this review offers a practical framework for developing next-generation biomedical solutions, including living therapeutics, targeted drug delivery platforms, and diagnostic biosensors, while addressing critical optimization and validation requirements for clinical translation.

The Engineering Paradigm: Foundational Principles and Core Concepts of Synthetic Biology

The field of biological engineering has undergone a fundamental transformation, evolving from the precise but limited scope of genetic engineering to the comprehensive, systems-level approach of synthetic biology. While genetic engineering refers to the direct manipulation of an organism's DNA, historically limited to altering one or a few genes at a time, synthetic biology represents an entirely new paradigm founded on engineering principles [1]. This approach enables the design and construction of novel, nucleic-acid-encoded biological parts, devices, systems, and organisms for useful purposes by applying a rigorous Design-Build-Test-Learn framework [1]. The conceptual foundation comes from electrical engineering, where defined components with known structures and behaviors can be systematically assembled, much like capacitors and resistors on a circuit board [1].

This shift has been particularly transformative in biomedical engineering research, where synthetic biology now enables the programming of biological systems for advanced therapeutic applications. Unlike traditional genetic engineering, which modifies existing genetic blueprints, synthetic biology aims to create novel biological functions not found in nature through standardized, modular component design [1]. The advent of powerful gene-editing technologies, particularly CRISPR/Cas9, has accelerated this transition by providing researchers with the ability to quickly and efficiently make wholesale changes to an organism's DNA [1]. This technological revolution has created unprecedented opportunities for engineering biological systems to address complex challenges in drug development, diagnostic tools, and therapeutic interventions.

Core Principles of the Synthetic Biology Approach

The Engineering Framework for Biological Systems

Synthetic biology is distinguished from traditional genetic engineering by its foundational commitment to engineering principles. The field operates on a cyclic framework of Design, Build, Test, and Learn that enables iterative refinement of biological systems [1]. In the design phase, biological components are specified with standardized characteristics and interfaces. The build phase implements these designs using DNA construction techniques. The test phase rigorously characterizes system performance, and the learn phase extracts principles to inform subsequent design cycles. This systematic approach allows synthetic biologists to treat biological components as predictable, standardized parts that can be assembled into complex systems with defined functions.
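The cycle can be read as an iterative search over designs. The Python sketch below illustrates it under stated assumptions. There is a single tunable design parameter (a hypothetical expression-strength setting). A stand-in `assay` function replaces real DNA construction and measurement. The Learn step simply narrows the search window around the best design so far. All names are hypothetical, and practical Learn phases usually fit statistical or machine-learning models to the accumulated data.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Result = Tuple[float, float]  # (design parameter, measured output)


@dataclass
class DBTLState:
    """Hypothetical record of one design campaign."""
    low: float   # lower bound of the current design window
    high: float  # upper bound of the current design window
    history: List[Result] = field(default_factory=list)


def design(state: DBTLState, n: int = 5) -> List[float]:
    """Design: propose n evenly spaced candidates inside the current window."""
    step = (state.high - state.low) / (n - 1)
    return [state.low + i * step for i in range(n)]


def build_and_test(designs: List[float], assay: Callable[[float], float]) -> List[Result]:
    """Build + Test: stand-in for DNA assembly and measurement (just calls the assay)."""
    return [(d, assay(d)) for d in designs]


def learn(state: DBTLState, results: List[Result], target: float) -> None:
    """Learn: store the data and shrink the window around the design closest to the target."""
    state.history.extend(results)
    best, _ = min(results, key=lambda r: abs(r[1] - target))
    half_width = (state.high - state.low) / 4
    state.low, state.high = best - half_width, best + half_width


def run_dbtl(assay: Callable[[float], float], target: float,
             low: float, high: float, cycles: int = 4) -> Result:
    """Run several DBTL iterations and return the best (design, output) seen."""
    state = DBTLState(low, high)
    for _ in range(cycles):
        learn(state, build_and_test(design(state), assay), target)
    return min(state.history, key=lambda r: abs(r[1] - target))
```

With a hypothetical linear assay `lambda x: 2 * x` and a target output of 7.0, four cycles starting from the window [0, 10] converge on a design of 3.4375 (output 6.875), showing how each cycle refines the previous one.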

The synthetic biology framework enables predictable system behavior through abstraction hierarchies that separate biological complexity into well-defined layers. At the lowest level, DNA parts (promoters, coding sequences, terminators) combine to form devices (logic gates, sensors, actuators), which in turn integrate into systems capable of complex functions. This hierarchical abstraction allows researchers to work at appropriate complexity levels without needing to manage all molecular details simultaneously. The resulting biological systems exhibit programmable functionality that can be directed to perform specific tasks, from environmental sensing to therapeutic production in response to disease biomarkers.
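The parts, devices and systems hierarchy maps naturally onto nested data types. A minimal sketch, assuming a simple record for each layer and one illustrative design rule (promoter, RBS, coding sequence, terminator). The class names are hypothetical and the sequences in the usage example are placeholders, not real genetic parts.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Part:
    """Lowest layer: one DNA-encoded function (hypothetical placeholder record)."""
    name: str
    kind: str      # e.g. "promoter", "rbs", "cds", "terminator"
    sequence: str  # DNA sequence


@dataclass
class Device:
    """Middle layer: an ordered set of parts that together perform one function."""
    name: str
    parts: List[Part]

    def sequence(self) -> str:
        # A device's DNA is the ordered concatenation of its parts.
        return "".join(p.sequence for p in self.parts)

    def is_expression_cassette(self) -> bool:
        # Illustrative design rule: promoter -> RBS -> coding sequence -> terminator.
        return [p.kind for p in self.parts] == ["promoter", "rbs", "cds", "terminator"]


@dataclass
class System:
    """Top layer: devices combined to give a higher-level behaviour."""
    name: str
    devices: List[Device]

    def sequence(self) -> str:
        return "".join(d.sequence() for d in self.devices)

    def part_count(self) -> int:
        return sum(len(d.parts) for d in self.devices)


# Usage with placeholder sequences:
promoter = Part("pX", "promoter", "AAAA")
rbs = Part("rbsX", "rbs", "CC")
cds = Part("gfpX", "cds", "ATGTAA")
terminator = Part("tX", "terminator", "GG")
reporter = Device("reporter", [promoter, rbs, cds, terminator])
two_reporters = System("two_reporters", [reporter, reporter])
```

Working at the System layer only requires calling `sequence()` or `part_count()`; the part-level details stay encapsulated in the lower layers, which is the point of the abstraction.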

Foundational Technologies Enabling Synthetic Biology

Several key technological advances have enabled the transition from genetic engineering to synthetic biology. The development of high-throughput DNA synthesis and sequencing technologies has dramatically reduced the cost and time required to build and characterize genetic constructs. Automated laboratory platforms allow for parallel construction and testing of thousands of genetic designs, generating the data necessary to inform predictive models. These advances have been complemented by the development of computational tools for designing biological systems, including bioinformatics algorithms, molecular modeling software, and computer-aided design platforms specifically tailored for biological engineering.

The most transformative technology enabling modern synthetic biology has been the CRISPR/Cas9 system, which functions as programmable molecular scissors [1]. Unlike previous gene-editing technologies that required custom protein engineering for each target site, the CRISPR/Cas9 system uses guide RNA molecules to direct DNA cleavage, making genome editing dramatically faster, cheaper, and more precise [1]. This technology has opened up new possibilities for genetic modification, including in biomanufacturing, where comprehensive changes to an organism's DNA can substantially alter which chemical reactions that organism can perform and what it can produce [1]. The CRISPR system has evolved beyond simple gene editing to include advanced applications such as base editing, prime editing, and multiplexed genome regulation, enabling sophisticated genome-scale engineering that defines the synthetic biology approach [2].
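Because targeting is encoded in the guide RNA, finding candidate target sites reduces to a sequence search. A minimal sketch, assuming the widely used Streptococcus pyogenes Cas9 convention of a 20-nucleotide protospacer immediately followed by a 5'-NGG-3' PAM. It scans both strands and reports every candidate. The function names are hypothetical, and practical guide design also scores off-target risk and on-target efficiency, which this sketch does not attempt.

```python
import re
from typing import List, Tuple

_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Reverse complement of an A/C/G/T DNA string."""
    return seq.translate(_COMPLEMENT)[::-1]


def find_spcas9_targets(seq: str, spacer_len: int = 20) -> List[Tuple[str, int, str, str]]:
    """Return (strand, start, protospacer, PAM) for each protospacer followed by an NGG PAM.

    Positions on the '-' strand are given in reverse-complement coordinates.
    """
    seq = seq.upper()
    # Zero-width lookahead so that overlapping candidate sites are all reported.
    pattern = re.compile(r"(?=([ACGT]{%d})([ACGT]GG))" % spacer_len)
    hits = []
    for strand, s in (("+", seq), ("-", reverse_complement(seq))):
        for m in pattern.finditer(s):
            hits.append((strand, m.start(), m.group(1), m.group(2)))
    return hits
```

For example, a 20-nt sequence followed by `TGG` yields one hit on the `+` strand, while the same 20-nt sequence preceded by `CCA` yields the equivalent hit on the `-` strand.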

Key Methodologies and Experimental Platforms

Computational and AI-Driven Design Tools

The synthetic biology approach leverages advanced computational tools throughout the Design-Build-Test-Learn cycle. Generative artificial intelligence (GAI) has recently shifted enzyme design from structure-centric strategies toward function-oriented paradigms [2]. These computational frameworks now span the entire design pipeline, including active-site design, backbone generation, inverse folding, and virtual screening. For instance, density functional theory calculations can define the geometry of key catalytic components to guide the design of idealized active sites (theozymes) that stabilize reaction transition states [2]. Guided by these theozymes, GAI approaches such as diffusion and flow-matching models can generate protein backbones pre-configured for catalysis.

Additional computational methods include inverse folding algorithms such as ProteinMPNN and LigandMPNN, which incorporate atomic-level constraints to optimize sequence-function compatibility [2]. To assess and optimize catalytic performance, virtual screening platforms such as PLACER allow evaluation of protein-ligand conformational dynamics under catalytically relevant conditions [2]. These computational advances are complemented by molecular dynamics simulations and quantum mechanical calculations, which serve as essential tools for investigating enzyme conformational dynamics and reaction mechanisms [2]. Through representative case studies, researchers have demonstrated how GAI-driven frameworks facilitate the rational creation of artificial enzymes with architectures distinct from natural homologs, thereby enabling catalytic activities not observed in nature [2].

Cell-Free Expression Systems for Rapid Prototyping

Ideal cell-free expression systems can, in principle, emulate the in vivo cellular environment on a controlled in vitro platform, providing a versatile prototyping environment for synthetic biology [3]. These systems are particularly valuable because they offer a simplified in vitro representation of cellular processes without the complexity of cell growth and metabolism. An efficient transcription-translation (TX-TL) cell-free expression system based on endogenous E. coli machinery can produce protein yields equivalent to those of T7-based systems at 98% lower cost than comparable commercial systems [3].

Table 1: Key Components of TX-TL Cell-Free Expression System

| Component | Function | Specification |
|---|---|---|
| Crude Cell Extract | Provides transcriptional and translational machinery | BL21-Rosetta2 strain; 27-30 mg/ml protein concentration [3] |
| Energy Source | Fuels protein synthesis | 3-phosphoglyceric acid (3-PGA); superior to creatine phosphate and phosphoenolpyruvate [3] |
| Reaction Buffer | Maintains optimal ionic conditions | Mg-glutamate and K-glutamate for increased efficiency [3] |
| DNA Template | Encodes desired genetic circuit | Compatible with both endogenous and T7 promoters [3] |

The entire protocol takes five days to prepare and yields enough material for up to 3000 single reactions in one preparation [3]. Once prepared, each reaction takes under 8 hours from setup to data collection and analysis. Mechanisms of regulation and transcription exogenous to E. coli, such as lac/tet repressors and T7 RNA polymerase, can be supplemented, while endogenous properties such as mRNA and DNA degradation rates can also be adjusted [3]. The resulting system has unique applications in synthetic biology as a prototyping environment or "TX-TL biomolecular breadboard" that allows for rapid testing of genetic designs before implementation in living cells [3].
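Reasoning about how adjusting mRNA degradation changes protein yield in such a breadboard is easier with a very simple kinetic model. The sketch assumes constant transcription (`k_tx`), first-order mRNA decay (`d_m`) and translation proportional to mRNA (`k_tl`), integrated with forward Euler. The parameter values are hypothetical and in arbitrary units. The model ignores energy and resource depletion, which in real TX-TL reactions eventually halts expression.

```python
from typing import Tuple


def simulate_txtl(k_tx: float, k_tl: float, d_m: float,
                  duration: float = 8.0, dt: float = 0.001) -> Tuple[float, float]:
    """Integrate a minimal TX-TL model with forward Euler; return (mRNA, protein) at the end.

    dm/dt = k_tx - d_m * m   (constant transcription, first-order mRNA decay)
    dp/dt = k_tl * m         (translation proportional to mRNA; no protein decay
                              and no resource depletion in this toy model)
    """
    m = 0.0
    p = 0.0
    for _ in range(int(round(duration / dt))):
        dm = k_tx - d_m * m
        dp = k_tl * m
        m += dm * dt
        p += dp * dt
    return m, p
```

With `k_tx=1`, `k_tl=1` and `d_m=2` over 8 time units, mRNA settles near k_tx/d_m = 0.5 and protein reaches about 3.75. Halving `d_m` doubles the steady-state mRNA level and raises protein to about 7, which is the kind of trend the adjustable degradation rates in the breadboard allow one to probe.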

Advanced Genome Editing Platforms

Modern synthetic biology leverages sophisticated multiplex genome editing platforms that enable coordinated modification of multiple genetic loci simultaneously. This approach is emerging as an ideal method for trait stacking to improve crops, functional genomics, and complex metabolic engineering in various biological systems [2]. The engineering and optimization of the latest CRISPR effectors for scalable and precise multiplex editing includes well-known systems like Cas9 and Cas12 variants, as well as newer, smaller variants such as CasMINI, Cas12j2, and Cas12k that offer advantages for delivery and packaging [2].

Table 2: CRISPR Systems for Multiplexed Genome Engineering

| CRISPR System | Editing Type | Key Applications |
|---|---|---|
| Cas9 | Double-strand break (DSB), nickase | Gene knockouts, large deletions |
| Cas12 Variants | DSB, nickase | Multiplex editing, diagnostics |
| Base Editors | Chemical base conversion | Point mutations without DSBs |
| Prime Editors | Reverse transcription | Precise insertions, deletions, substitutions |
| CasMINI | DSB | Delivery-constrained applications |

Central to any multiplexing approach are the expression and processing strategies of crRNA arrays, which include tRNA-based and ribozyme-mediated methods, synthetic modular designs, and AI-optimized guide RNAs tailored to diverse systems [2]. These editing technologies are complemented by next-generation delivery platforms such as lipid nanoparticles, virus-like particles, and metal-organic frameworks that overcome conventional barriers in in vivo applications [2]. Together, these advances enable precise, high-throughput, and programmable multiplex genome editing across biological systems, setting the foundation for future innovations in synthetic biology, crop improvement, and therapeutic intervention in multigene diseases [2].
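The repeat-spacer layout of a Cas12-type crRNA array can be sketched as plain string assembly. This is a minimal sketch under stated assumptions. The direct repeat is passed in as a parameter; use the validated repeat for your Cas12 ortholog, since the two-base repeat in the example is only a placeholder. A trailing repeat is optional. tRNA- or ribozyme-based designs would replace the repeat with those processing elements. The function name is hypothetical.

```python
import re
from typing import List

_DNA = re.compile(r"^[ACGT]+$")


def build_crrna_array(spacers: List[str], direct_repeat: str,
                      trailing_repeat: bool = True) -> str:
    """Assemble a Cas12-style crRNA array as DR-S1-DR-S2-...-DR-Sn, optionally ending in DR."""
    dr = direct_repeat.upper()
    if not _DNA.match(dr):
        raise ValueError("direct repeat must contain only A/C/G/T")
    units = []
    for i, spacer in enumerate(spacers):
        s = spacer.upper()
        if not _DNA.match(s):
            raise ValueError(f"spacer {i} contains non-A/C/G/T characters")
        if dr in s:
            # A repeat copy inside a spacer could be cleaved during array processing.
            raise ValueError(f"spacer {i} contains the direct repeat")
        units.append(dr + s)
    return "".join(units) + (dr if trailing_repeat else "")
```

For instance, `build_crrna_array(["AAAA", "CCCC"], "GT")` returns `GTAAAAGTCCCCGT`. Rejecting spacers that contain the repeat is a simple guard against ambiguous processing sites.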

Applications in Biomedical Engineering

Immunotherapy and Immune Cell Engineering

The application of synthetic biology principles to the immune system opens new mechanism-based avenues for reengineering immune responses. These include precisely controlling temporally encoded cell-cell interactions, modulating gene expression in a state-specific manner, and recording and responding to cellular experiences over time via programmable effector functions [4]. These capabilities are major pillars of the broader goals of modulating immune cell tropism, enabling engineered cells to evade immune detection, and developing next-generation cell-based immunotherapies [4]. Such advances are central to tackling the many diseases and processes in which the immune system plays a pivotal role in maintaining or disrupting organ homeostasis, from cancer to neurodegeneration to fibrosis and senescence [4].

Specific applications in immunological synthetic biology include engineering immune cells with enhanced specificity, functionality, and controllability, including improved sensing, homing, and effector capabilities [4]. Researchers are designing synthetic bio-circuits to direct cell behavior in several ways. These circuits can control immune cell tropism or tissue localization. They can support in situ detection of complex, multi-ligand patterns to identify emerging or pre-symptomatic diseases. They can also regulate immune cell states and responses related to T cell exhaustion, maintenance of self-tolerance, or T cell activation thresholds [4]. Additional approaches focus on constructing artificial or semi-synthetic immune systems for disease modeling, mechanistic discovery, and therapeutic screening. Another line of work develops modular systems for immune surveillance and non-invasive reporting, allowing engineered cells to communicate in real time what they have sensed or responded to [4].

Sustainable Bioproduction and Biomaterials

Synthetic biology enables the engineering of microbial platforms for sustainable bioproduction of valuable compounds. For instance, Bacillus methanolicus MGA3 is a methylotrophic bacterium with high potential as a production host in the bioeconomy, particularly with methanol as a feedstock [2]. Recent advances in strain engineering have broadened the biotechnological potential of this thermophilic methylotroph [2]. These include improved transformation efficiency, CRISPR/Cas9-based genome editing, and the use of genome-scale models for strain design. With its expanding product portfolio, B. methanolicus shows promise as a microbial cell factory for producing tricarboxylic acid cycle and ribulose monophosphate cycle intermediates and their derivatives [2].
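Genome-scale models are commonly used through flux balance analysis (FBA), which finds a steady-state flux distribution (S·v = 0, within flux bounds) that maximizes an objective such as product export. The sketch below is a toy illustration only. It uses a hypothetical four-reaction network (methanol uptake, conversion, product export, by-product loss), not a model of B. methanolicus. The network is solved with SciPy's `linprog`, which must be installed.

```python
from scipy.optimize import linprog

# Toy stoichiometric matrix S (rows: internal metabolites A, B; columns: reactions).
# v0: methanol uptake -> A ; v1: A -> B ; v2: B -> product export ; v3: A -> by-product
S = [
    [1, -1, 0, -1],  # A
    [0, 1, -1, 0],   # B
]


def max_product_flux(uptake_max: float = 10.0, v1_max: float = 1000.0) -> float:
    """Maximize the product export flux v2 subject to S v = 0 and flux bounds."""
    c = [0, 0, -1, 0]  # linprog minimizes, so negate the product flux
    bounds = [(0, uptake_max), (0, v1_max), (0, 1000), (0, 1000)]
    res = linprog(c, A_eq=S, b_eq=[0, 0], bounds=bounds, method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return -res.fun
```

With uptake capped at 10 units, all carbon can be routed to product (flux 10). Capping the hypothetical conversion step `v1` at 6 lowers the optimum to 6, which is how such models flag bottleneck reactions as engineering targets.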

In the realm of biomaterials, synthetic biology approaches are being applied to produce recombinant collagen that avoids the risks of pathogen transmission associated with animal-derived collagen [2]. Advances in AI-assisted protein engineering are accelerating the design of synthetic collagens and their applications in biomaterials [2]. These engineered biomaterials have crucial applications in biomedical fields such as drug delivery systems, cell culture matrices, and tissue engineering scaffolds [2]. The integration of computational design with biological production systems represents a hallmark of the synthetic biology approach to biomaterial development.
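Collagen's characteristic Gly-X-Y repeat, in which every third residue is glycine, is one sequence-level constraint that such designs must respect. The sketch below is a hypothetical screening helper, not a design tool. It only reports the best reading frame and the fraction of triplets that start with glycine, and ignores everything else that governs triple-helix stability.

```python
from typing import Tuple


def gly_xy_fraction(seq: str) -> float:
    """Fraction of complete triplets (read from position 0) whose first residue is glycine."""
    seq = seq.upper()
    n_triplets = len(seq) // 3
    if n_triplets == 0:
        return 0.0
    return sum(seq[3 * i] == "G" for i in range(n_triplets)) / n_triplets


def collagen_repeat_score(seq: str) -> Tuple[int, float]:
    """Best (frame, fraction) over the three possible triplet frames of a protein sequence."""
    scores = [(frame, gly_xy_fraction(seq[frame:])) for frame in range(3)]
    return max(scores, key=lambda fs: fs[1])
```

A perfect repeat such as `"GPP" * 10` scores `(0, 1.0)`; a sequence where glycine appears only in an interrupted pattern scores lower.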

Essential Research Reagent Solutions

The implementation of synthetic biology methodologies requires specialized research reagents and tools. The following table summarizes key solutions essential for experimental work in this field.

Table 3: Essential Research Reagent Solutions for Synthetic Biology

| Category | Specific Solution | Research Application |
|---|---|---|
| Genome Editing | CRISPR-Cas9 systems [1], Base editors [2], Prime editors [2] | Targeted gene knock-out, knock-in, and precise sequence alteration |
| Delivery Platforms | Lipid nanoparticles [2], Virus-like particles [2], Metal-organic frameworks [2] | Efficient in vivo delivery of genetic constructs |
| Cell-Free Systems | E. coli TX-TL system [3], 3-PGA energy source [3] | Rapid prototyping of genetic circuits without cellular constraints |
| Computational Design | ProteinMPNN [2], PLACER [2], Molecular dynamics simulations [2] | In silico design and optimization of biological parts and systems |
| Model Organisms | Bacillus methanolicus [2], Escherichia coli [3] | Engineered microbial hosts for bioproduction and circuit implementation |

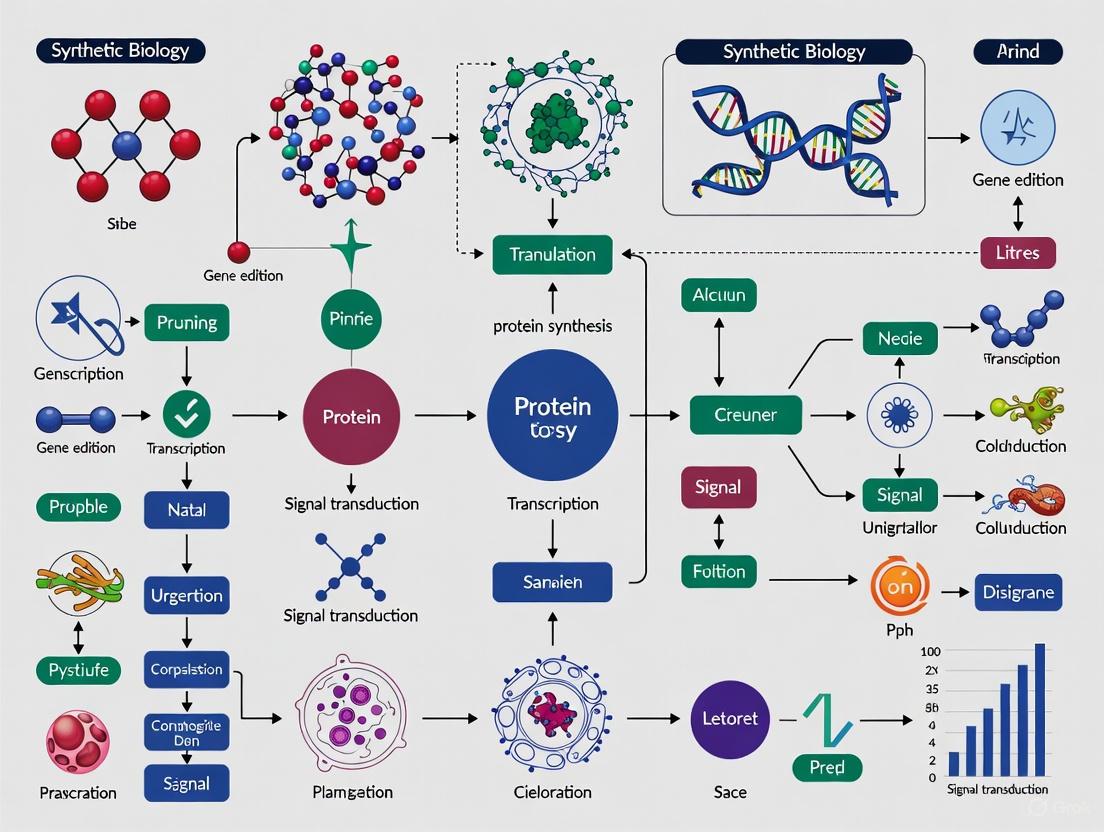

Visualizing Synthetic Biology Workflows

Design-Build-Test-Learn Cycle

The core engineering framework of synthetic biology follows an iterative Design-Build-Test-Learn cycle that enables continuous refinement of biological systems.

CRISPR-Mediated Multiplex Genome Editing

Modern synthetic biology utilizes advanced CRISPR systems for coordinated editing of multiple genetic loci.

Cell-Free Transcription-Translation System

Cell-free expression platforms provide a controlled environment for prototyping genetic circuits.

The transition from genetic engineering to biological systems engineering represents a fundamental shift in how we approach biological design. Synthetic biology has established itself as a distinct discipline characterized by its engineering-based framework, standardized biological parts, and systematic design principles. As the field continues to mature, several emerging trends are likely to shape its future development. The integration of artificial intelligence and machine learning throughout the Design-Build-Test-Learn cycle will accelerate the design of biological systems with increasingly complex functions [2]. The expansion of cell-free expression systems as prototyping platforms will enable more rapid characterization and optimization of genetic circuits before implementation in living cells [3]. Additionally, advances in multiplex genome editing will facilitate engineering of complex traits and metabolic pathways for therapeutic and industrial applications [2].

For biomedical engineering research, synthetic biology offers unprecedented opportunities to program biological systems for improved healthcare outcomes. The application of synthetic biology principles to immunology is already yielding novel approaches to disease detection and treatment [4]. As these technologies continue to develop, they will enable increasingly sophisticated therapeutic strategies, from engineered immune cells for cancer treatment to synthetic genetic circuits for managing metabolic diseases. The ongoing refinement of the synthetic biology toolkit—including more precise genome editors, improved delivery vehicles, and better computational models—will further enhance our ability to design and implement biological systems that address complex challenges in medicine and biotechnology. Through the continued application of engineering principles to biological design, synthetic biology promises to transform how we understand, manipulate, and ultimately utilize biological systems for human benefit.

Synthetic biology represents an interdisciplinary field that applies core engineering principles to biological systems, enabling the design and construction of new biological entities or the modification of existing ones [5]. This engineering-based approach aims to transform biology from a purely investigative science into a predictable engineering discipline, facilitating the rational design of biological systems with predefined and reliable functions. The foundational principles of standardization, modularity, and abstraction form the cornerstone of this paradigm shift, providing the necessary framework for managing biological complexity and accelerating the development of innovative solutions in biomedical engineering and therapeutic development [6].

These principles directly address the inherent complexity of biological systems, which has traditionally hindered predictable engineering outcomes. Standardization establishes universal measurement and assembly techniques, modularity enables the decomposition of complex systems into functional units, and abstraction creates hierarchical design layers that allow engineers to focus on specific system levels without being overwhelmed by underlying biological details [7]. The integration of these principles has created a powerful foundation for advancing biomedical applications, including engineered cellular therapies, diagnostic biosensors, and sustainable biomanufacturing platforms [8]. This whitepaper examines the technical implementation, experimental validation, and practical application of these core principles in synthetic biology research for biomedical contexts.

The Principle of Standardization

Standardization in synthetic biology establishes consistent frameworks for quantifying, assembling, and characterizing biological components. This principle is fundamental for enabling reproducible research, facilitating collaboration across laboratories, and creating predictable design workflows essential for biomedical applications [6].

Quantitative Measurement Standards

A critical aspect of standardization involves developing universal measurement units for biological components. The Relative Promoter Unit (RPU) system exemplifies this approach by quantifying promoter activity relative to a standardized reference promoter measured under identical experimental conditions [6]. This methodology enables meaningful comparisons of genetic part performance across different laboratories and experimental setups. Experimental data demonstrates that when properly implemented, the RPU approach maintains reasonable consistency, with promoters characterized via different measurement systems showing an average coefficient of variation (CV) of just 9% among technical replicates [6].
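The coefficient of variation quoted here is the sample standard deviation divided by the mean, expressed as a percentage. A minimal sketch for computing it from replicate RPU values follows. The function name is hypothetical, and the replicate numbers in the usage note are illustrative, not data from [6].

```python
from statistics import mean, stdev
from typing import Sequence


def coefficient_of_variation(values: Sequence[float]) -> float:
    """Sample coefficient of variation in percent: 100 * s / mean."""
    if len(values) < 2:
        raise ValueError("need at least two replicate measurements")
    m = mean(values)
    if m == 0:
        raise ValueError("mean is zero, so the CV is undefined")
    return 100.0 * stdev(values) / m
```

For three hypothetical replicates of 0.9, 1.0 and 1.1 RPU this gives a CV of 10%.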

The experimental protocol for RPU measurement involves transforming a standardized genetic construct containing the test promoter into an appropriate host strain (commonly E. coli TOP10), growing cultures in defined media under specific conditions (typically 37°C with aeration), and measuring reporter gene output (e.g., fluorescence) during exponential growth phase using plate readers or flow cytometry. Data normalization is performed against both the reference promoter and appropriate blank controls to ensure accurate relative measurements.
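The normalization step can be sketched as follows, assuming single end-point readings of fluorescence and OD for a test culture, a reference-promoter culture, and a blank. This is a simplification. RPU is often defined from per-cell reporter synthesis rates over time rather than from single snapshots. Separate media-only and non-fluorescent-cell controls are also commonly used for OD and autofluorescence. Function names and values are hypothetical.

```python
from typing import Tuple

Reading = Tuple[float, float]  # (fluorescence, OD) from one culture or well


def per_cell_signal(sample: Reading, blank: Reading) -> float:
    """Background-subtracted fluorescence per unit of background-subtracted OD."""
    fluor, od = sample
    fluor_blank, od_blank = blank
    od_net = od - od_blank
    if od_net <= 0:
        raise ValueError("sample OD is not above the blank")
    return (fluor - fluor_blank) / od_net


def relative_promoter_units(test: Reading, reference: Reading, blank: Reading) -> float:
    """Activity of the test promoter expressed relative to the reference promoter (RPU)."""
    return per_cell_signal(test, blank) / per_cell_signal(reference, blank)
```

With hypothetical readings of (2100, 0.55) for the test, (1100, 0.55) for the reference and (100, 0.05) for the blank, the test promoter scores 2.0 RPU.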

Physical Assembly Standards

Standardized physical assembly methods create consistent techniques for constructing composite genetic systems. BioBrick assembly represents one such standardized framework that enables hierarchical construction of genetic devices from basic parts using a uniform assembly process [6]. This physical standardization allows researchers to share components and reliably combine them into larger functional systems. The table below summarizes key standardization frameworks in synthetic biology:

Table 4: Standardization Frameworks in Biological Design

| Standard Type | Implementation | Application | Experimental Consideration |
|---|---|---|---|
| Measurement Standard | Relative Promoter Units (RPU) | Promoter characterization | Requires reference promoter & controlled growth conditions |
| Physical Assembly | BioBrick assembly | Hierarchical DNA construction | Uses standardized prefix/suffix sequences |
| Data Exchange | Systems Biology Markup Language (SBML) | Computational model sharing | Ensures model reproducibility & interoperability |
| Functional Characterization | Standard biological parts registry | Part performance documentation | Must specify measurement conditions & host chassis |
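The prefix/suffix logic behind BioBrick assembly can be sketched in a few lines. This minimal sketch assumes the BioBrick RFC10 standard. Under that standard, parts must be free of internal EcoRI, XbaI, SpeI and PstI sites; NotI is also checked here as a commonly recommended extra restriction. Joining two parts with the standard prefix leaves the 8-bp mixed XbaI/SpeI scar TACTAGAG. The shorter scar that arises when the downstream part is a coding sequence using the ATG-overlapping prefix is not modelled. Function names are hypothetical.

```python
from typing import List

# Recognition sites a BioBrick (RFC10) part should not contain internally.
# All are palindromic, so checking one strand is sufficient.
FORBIDDEN_SITES = {
    "EcoRI": "GAATTC",
    "XbaI": "TCTAGA",
    "SpeI": "ACTAGT",
    "PstI": "CTGCAG",
    "NotI": "GCGGCCGC",  # commonly recommended extra restriction
}

# Mixed SpeI/XbaI scar left between two parts joined with the standard prefix.
SCAR = "TACTAGAG"


def forbidden_sites(part_seq: str) -> List[str]:
    """Names of forbidden restriction sites found inside a part."""
    s = part_seq.upper()
    return [name for name, site in FORBIDDEN_SITES.items() if site in s]


def compose(upstream: str, downstream: str) -> str:
    """Predicted upstream + downstream sequence after a standard BioBrick assembly."""
    for label, seq in (("upstream", upstream), ("downstream", downstream)):
        found = forbidden_sites(seq)
        if found:
            raise ValueError(f"{label} part contains internal {', '.join(found)} site(s)")
    return upstream.upper() + SCAR + downstream.upper()
```

Because the scar contains neither an intact XbaI nor an intact SpeI site, the composite can itself be treated as a new part and assembled again, which is what makes the standard hierarchical.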

The Principle of Modularity

Modularity in synthetic biology enables the creation of complex biological systems through the composition of functionally self-contained units or modules [6]. This principle allows engineers to design systems using well-characterized components whose behavior remains predictable when interconnected, mirroring the modular design approaches that have proven successful in other engineering disciplines.

Experimental Assessment of Modularity

Rigorous experimental studies have quantified the challenges and limitations of biological modularity. One study characterized promoter activity using different biological measurement systems (varying plasmids, reporter genes, and ribosome binding sites). Most promoters showed consistent activity, but some exhibited statistically significant differences (P < 0.05), with coefficients of variation of up to 22% across measurement systems [6]. This highlights the context-dependent nature of biological components and the importance of standardized measurement conditions.

Further investigations into modularity limitations tested promoter activity variation when independent expression modules were physically combined in the same system. Experiments constructing composite systems with two expression cassettes (GFP and RFP reporters) demonstrated that promoter activity could vary significantly (up to 35% CV) depending on contextual factors such as relative position and flanking sequences [6]. These findings underscore the importance of developing design rules that account for context-dependence when creating modular biological systems.

Functional Interconnection Testing

The modularity of input devices driving a common output device represents a crucial test for biological modularity. Experimental approaches have tested this principle by connecting different input modules (constitutive and regulated promoters) to a fixed output device (a genetic logic inverter) expressing GFP [6]. In a truly modular system, identical transcriptional input signals should produce identical GFP outputs regardless of the specific input device generating the signal. Experimental results revealed significant variability, with output variations up to 44% depending on the specific input device used, highlighting the challenges in achieving perfect modularity in biological systems [6].

Figure 1: Modularity Test Framework for Biological Devices. Different input devices (X1-XN) generating identical transcriptional signals should produce identical outputs when connected to a fixed output device in a truly modular system.
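The ideal-modularity expectation can be made explicit with a standard Hill-type model of a repressor-based inverter. If the output depends only on the input promoter activity x, then any two input devices delivering the same x must give the same output. Measured deviations from the prediction therefore quantify context effects. The sketch below assumes this Hill form; the parameter values are hypothetical, not fitted to the data in [6].

```python
from typing import Sequence


def inverter_output(x: float, y_min: float, y_max: float, k: float, n: float) -> float:
    """Steady-state output of a repressor-based NOT gate (Hill repression).

    x is the input promoter activity (e.g. in RPU); the output falls from y_max
    toward y_min as x rises past the threshold k, with steepness set by n.
    """
    return y_min + (y_max - y_min) * k ** n / (k ** n + x ** n)


def max_relative_deviation(measured: Sequence[float], predicted: float) -> float:
    """Largest deviation (%) of measured outputs from the model prediction."""
    return max(100.0 * abs(m - predicted) / predicted for m in measured)
```

For example, with a hypothetical prediction of 5.0 and measured outputs of 4.0, 5.0 and 6.0 from three input devices, the largest deviation is 20%. A perfectly modular system would give 0%.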

The Principle of Abstraction

Abstraction creates hierarchical design layers that enable engineers to work at appropriate complexity levels without needing to manage all biological details simultaneously. This principle, borrowed from computer engineering, allows for the encapsulation of biological complexity within well-defined interfaces, dramatically accelerating the design process for sophisticated biological systems [7].

The abstraction hierarchy in synthetic biology typically follows multiple layers, from DNA sequences at the lowest level to full systems at the highest level. At each layer, specific design tools and methodologies facilitate the creation and integration of biological components. The emerging field of Bio-Design Automation (BDA) applies computational tools to streamline this hierarchical design process, creating software solutions that support the specification, design, building, testing, and learning phases of biological engineering [7].

Figure 2: Abstraction Hierarchy in Biological Design. This hierarchy enables engineers to work at appropriate complexity levels, with standardized interfaces between layers.

Bio-Design Automation Tools

Abstraction is implemented through specialized software tools that support biological design at different hierarchy levels. These Bio-Design Automation (BDA) tools create formal computational environments for designing, simulating, and optimizing biological systems [7]. The table below summarizes key BDA tools and their applications in the design workflow:

Table 5: Bio-Design Automation Tools for Abstract Biological Design

| Tool Name | Abstraction Level | Function | Application Example |
|---|---|---|---|
| Cello | Device/System | Compiles Verilog code to DNA sequences | Genetic logic circuit design [7] |
| Eugene | Part/Device | Rule-based specification language | Automated generation of biological devices [7] |
| GenoCAD | Part/Device | Gene design with grammatical rules | Constraint-based biological design [7] |
| Antimony | Device/System | Text-based model definition | Biochemical network modeling & simulation [7] |
| RBS Calculator | Part | Predicts translation initiation rates | Translation efficiency optimization [7] |
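Cello maps a Verilog specification onto a library of repressor-based NOT and NOR gates, so the desired function must first be expressed in those primitives. The Python sketch below does not use Cello or its API. It only illustrates, under that assumption, how an AND function can be rebuilt from NOR and NOT gates and checked against its truth table. The function names are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Tuple


def nor_gate(a: bool, b: bool) -> bool:
    # Repressor-based NOR: either input promoter expresses a repressor that
    # shuts off the output promoter, so the output is ON only if both inputs are OFF.
    return not (a or b)


def not_gate(a: bool) -> bool:
    # A NOT gate behaves like a NOR gate with a single (duplicated) input.
    return nor_gate(a, a)


def and_gate(a: bool, b: bool) -> bool:
    # De Morgan: A AND B = NOR(NOT A, NOT B), i.e. three gates in total.
    return nor_gate(not_gate(a), not_gate(b))


def truth_table(gate: Callable[[bool, bool], bool]) -> Dict[Tuple[bool, bool], bool]:
    """Evaluate a two-input gate on all four input combinations."""
    return {(a, b): gate(a, b) for a, b in product([False, True], repeat=2)}
```

Checking the composed circuit against the intended truth table mirrors, in miniature, the specification-to-circuit verification that design-automation tools perform before any DNA is built.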

Integrated Experimental Framework

The synergistic application of standardization, modularity, and abstraction creates a powerful framework for engineering biological systems. This integrated approach enables the design and construction of sophisticated genetic devices with predictable behaviors, accelerating the development of biomedical solutions.

The Design-Build-Test-Learn Cycle

The core engineering workflow in synthetic biology follows an iterative Design-Build-Test-Learn (DBTL) cycle, where each iteration refines the biological design based on experimental data [7]. This cyclical process depends fundamentally on all three core principles: standardization ensures consistent experimental results, modularity enables the reuse and recombination of components, and abstraction facilitates model-based design and simulation. Advanced platforms like Phoenix now guide users through automated, iterative DBTL cycles, incorporating machine learning to continuously improve designs based on empirical data [7].

Experimental Protocol for Genetic Device Characterization

A standardized experimental protocol for characterizing genetic devices demonstrates the practical integration of all three principles:

Device Design: Create genetic constructs using standardized parts (Standardization) from modular libraries (Modularity) through abstract design tools (Abstraction).

Construct Assembly: Assemble devices using standardized assembly methods (Standardization) such as BioBrick assembly.

Transformation and Culturing: Introduce constructs into the host chassis by electroporation or chemical transformation. Grow cultures in a standardized medium (e.g., LB with appropriate antibiotics) at a specified temperature (e.g., 37°C for E. coli) with continuous shaking (e.g., 250 rpm).

Measurement and Data Collection: Measure reporter outputs (fluorescence, absorbance) during exponential growth phase using plate readers or flow cytometry. Include appropriate controls (empty vector, reference standards).

Data Normalization and Analysis: Normalize data using the RPU framework (Standardization) and compare to model predictions (Abstraction). Evaluate device performance across different contexts (Modularity).

This integrated approach enables rigorous characterization of genetic devices while facilitating comparison and reuse across different research contexts.
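Restricting measurements to exponential growth can itself be made reproducible. The following minimal sketch assumes OD readings over time and a hypothetical OD window (0.05 to 0.3 here) taken to represent exponential phase. The appropriate window depends on the instrument, path length and strain, so the thresholds are placeholders. The specific growth rate is estimated as the least-squares slope of ln(OD) against time.

```python
import math
from typing import List, Sequence, Tuple


def exponential_window(times: Sequence[float], ods: Sequence[float],
                       od_low: float = 0.05, od_high: float = 0.3) -> List[Tuple[float, float]]:
    """Keep (time, OD) points whose OD lies inside the assumed exponential-phase range."""
    return [(t, od) for t, od in zip(times, ods) if od_low <= od <= od_high]


def specific_growth_rate(times: Sequence[float], ods: Sequence[float],
                         od_low: float = 0.05, od_high: float = 0.3) -> float:
    """Least-squares slope of ln(OD) versus time inside the window (per time unit)."""
    pts = exponential_window(times, ods, od_low, od_high)
    if len(pts) < 3:
        raise ValueError("too few points inside the exponential-phase window")
    xs = [t for t, _ in pts]
    ys = [math.log(od) for _, od in pts]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sxy / sxx


def doubling_time(mu: float) -> float:
    """Doubling time corresponding to a specific growth rate mu."""
    return math.log(2) / mu
```

Reporter readings taken at the time points retained by `exponential_window` can then be passed to the RPU normalization, so that every device is compared at an equivalent physiological state.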

Research Reagent Solutions

The practical implementation of synthetic biology principles requires specialized research reagents and tools. The following table details essential materials and their functions in biological design experiments:

Table 6: Essential Research Reagents for Biological Design Experiments

| Reagent/Category | Function | Example Implementation |
|---|---|---|
| Standard Biological Parts | Basic functional units for genetic construction | BioBrick parts in Registry of Standard Biological Parts [6] |
| Standardized Vectors | DNA backbones for part assembly | Plasmid systems with standardized prefixes/suffixes [6] |
| Reporter Proteins | Quantitative measurement of part activity | GFP, RFP with standardized measurement protocols [6] |
| Genome Engineering Tools | Precise genetic modification | CRISPR-Cas9, TALENs, ZFNs for chassis engineering [5] |
| Host Chassis | Cellular environment for device operation | E. coli strains (TOP10, KRX) with characterized behavior [6] |
| Characterization Tools | Quantitative measurement of system performance | Flow cytometers, plate readers, RPU measurement systems [6] |

Biomedical Applications and Future Directions

The application of standardization, modularity, and abstraction principles enables transformative biomedical applications, including engineered cellular therapies, diagnostic biosensors, and programmable living medicines [8]. These principles facilitate the development of sophisticated biological systems that can detect disease states, produce therapeutic responses, and interface with human physiology in precisely controlled ways.

Emerging trends include the development of virtual cells or digital cellular twins that create integrative computational models of cellular processes [9]. These models represent the ultimate expression of abstraction in biological design, enabling in silico prediction of cellular behavior before physical implementation. The integration of artificial intelligence with synthetic biology further enhances these capabilities, allowing for more accurate predictions and optimized designs [10].

As synthetic biology continues to mature, the core principles of standardization, modularity, and abstraction will remain fundamental to advancing biomedical engineering research. Through their consistent application, researchers can develop increasingly sophisticated biological systems that address complex challenges in therapeutics, diagnostics, and sustainable biomedicine.

The engineering of biological systems relies on a foundational hierarchy that organizes biological functionality into manageable levels of complexity: Parts, Devices, and Systems [11]. This structured framework allows researchers to apply engineering principles to biology, enabling the predictable design and construction of new biological functions. In biomedical engineering, this hierarchy provides the toolbox for programming cells to perform therapeutic tasks, diagnose diseases, and produce valuable pharmaceuticals. Synthetic biology merges biology, engineering, and computer science to modify and create living systems, developing novel biological functions, carried out by amino acids, proteins, and cells, that are not found in nature [11]. By creating reusable biological "parts," the field streamlines design, reduces the need to start from scratch, and expands the capabilities and efficiency of biotechnology for biomedical applications [11].

Biological Parts: The Fundamental Building Blocks

Biological parts are the most basic functional units in synthetic biology. These standardized DNA-encoded components perform discrete biological functions and can be combined to form more complex structures.

- Promoters: DNA sequences where RNA polymerase binds to initiate transcription. Engineered promoters allow precise control over the timing and level of gene expression. In endogenous E. coli TX-TL systems, sigma70-based promoters with phage operators enable regulated expression [3].

- Protein Coding Sequences: DNA sequences that encode proteins, which can be endogenous or heterologous. These can be optimized for expression in different chassis organisms. For example, the pBEST-OR2-OR1-Pr-UTR1-deGFP-T500 plasmid expresses deGFP and can produce up to 0.75 mg/ml of reporter protein in cell-free systems [3].

- Ribosome Binding Sites (RBS): Sequences that facilitate the binding of ribosomes to mRNA, controlling translation initiation rates. RBS engineering is crucial for optimizing protein expression levels.

- Terminators: DNA sequences that signal the end of transcription, preventing read-through and ensuring proper transcription termination.

- Aptamers: Short nucleic acid sequences that bind specific ligands, providing a modular strategy for transcriptional regulation. When combined with switchable transcription terminators (SWTs), aptamers create ligand-responsive systems with improved precision and dynamic range [2].
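To make the part-device-system hierarchy concrete, the following minimal Python sketch models parts as typed records and a device as an ordered promoter-RBS-CDS-terminator cassette. The class names, the strict four-part ordering rule, and the treatment of UTR1 as the RBS-containing part are illustrative assumptions, not an established standard such as SBOL; the example cassette simply mirrors the pBEST-OR2-OR1-Pr-UTR1-deGFP-T500 plasmid named above.

```python
from dataclasses import dataclass, field
from typing import List

# Assumed canonical order for a minimal expression cassette (illustrative rule).
CASSETTE_ORDER = ["promoter", "rbs", "cds", "terminator"]


@dataclass(frozen=True)
class Part:
    """A basic DNA-encoded unit with a single function."""
    name: str
    part_type: str  # "promoter", "rbs", "cds" or "terminator" in this sketch


@dataclass
class Device:
    """An ordered assembly of parts performing one defined operation."""
    name: str
    parts: List[Part]

    def __post_init__(self) -> None:
        # Reject assemblies that do not follow the assumed cassette order.
        types = [part.part_type for part in self.parts]
        if types != CASSETTE_ORDER:
            raise ValueError(f"{self.name}: expected {CASSETTE_ORDER}, got {types}")

    def label(self) -> str:
        return "-".join(part.name for part in self.parts)


@dataclass
class System:
    """A collection of devices that together execute a coordinated behavior."""
    name: str
    devices: List[Device] = field(default_factory=list)

    def part_count(self) -> int:
        return sum(len(device.parts) for device in self.devices)


reporter = Device("deGFP reporter", [
    Part("OR2-OR1-Pr", "promoter"),
    Part("UTR1", "rbs"),
    Part("deGFP", "cds"),
    Part("T500", "terminator"),
])
print(reporter.label())  # OR2-OR1-Pr-UTR1-deGFP-T500
```

Validating order at construction time is one way to encode "composability" rules; a fuller model would allow multi-gene devices and operator sites rather than a single fixed template.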

Table 1: Characterization of Core Biological Parts

| Part Type | Primary Function | Key Parameters | Example Applications |
|---|---|---|---|
| Promoter | Transcription initiation | Strength, inducibility, specificity | Sigma70-based promoters for endogenous expression [3] |
| RBS | Translation initiation | Efficiency, strength | Optimizing protein expression levels |
| Aptamer | Ligand binding | Affinity, specificity | Ligand-responsive transcriptional regulation [2] |
| Terminator | Transcription termination | Efficiency | Switchable Transcription Terminators (SWTs) [2] |
| Protein Coding Sequence | Protein specification | Codon usage, stability | deGFP reporter (pBEST plasmid) [3] |

Devices: Functional Units for Biological Circuitry

Devices are functional assemblies of multiple biological parts that perform defined operations. They process inputs and generate outputs, creating basic logical functions within cells.

- Logic Gates: Genetic circuits that perform Boolean operations. SWTs have been used to build biological logic gates in vitro, including AND, NOT, NAND, and NOR gates [2]. These gates enable decision-making capabilities in engineered cells.

- Switchable Systems: Regulatory systems that toggle between states. The combination of aptamers with SWTs creates heterologous input logic gates that improve transcription activation by up to 3.3-fold for SWT and 7.84-fold for aptamer regulation compared to their individual performance [2].

- Sensors: Devices that detect environmental or intracellular signals. Quorum sensing (QS) systems, characterized by pathway-independence and autonomous control, can be engineered for population-level sensing and coordination in microbial communities [2].

- Expression Controllers: Devices that regulate gene expression output. Modular transcriptional regulation systems using SWTs and aptamers provide flexible and programmable strategies for fine-tuning gene expression [2].

The development of CRISPR-based technologies has transformed device engineering by enabling programmable regulation. Engineering and optimization of CRISPR effectors, from well-known systems such as Cas9 and Cas12 variants to smaller effectors such as CasMINI, Cas12j2, and Cas12k, have expanded the toolbox for creating sophisticated genetic devices [2].

Systems: Integrated Networks for Complex Functions

Systems represent the highest level of the hierarchy, integrating multiple devices to execute complex, coordinated behaviors with applications in therapeutic, diagnostic, and biomanufacturing contexts.

- Metabolic Pathways: Engineered systems for bioproduction. Bacillus methanolicus MGA3 has been engineered as a versatile thermophilic platform for sustainable bioproduction from methanol and alternative feedstocks [2]. The central carbon metabolism of B. methanolicus has been harnessed for various native and non-native carbon sources, expanding its product portfolio to include tricarboxylic acid (TCA) cycle and ribulose monophosphate (RuMP) cycle intermediates and their derivatives [2].

- Therapeutic Production Systems: Engineered organisms for pharmaceutical synthesis. Distributed biomanufacturing offers unprecedented production flexibility in both location and timing, enabling swift responses to sudden demands like disease outbreaks requiring specific medications [11].

- Biosensing Systems: Integrated circuits for diagnostic applications. The combination of aptamers with switchable transcription terminators creates modular, ligand-responsive systems with applications in biosensing and nucleic acid therapy [2].

- Electrocatalytic-Biosynthetic Hybrid Systems: Coupled systems for CO2 fixation. These systems synergize electrocatalytic CO2 reduction (which achieves C1/C2 products with high formation rates) with biosynthesis (which utilizes these C1/C2 species for carbon chain elongation) for efficient CO2 upcycling [2].

Table 2: Representative Synthetic Biology Systems and Applications

| System Type | Components | Function | Research Context |
|---|---|---|---|
| TX-TL Cell-Free System | Endogenous E. coli transcription-translation machinery, energy source, nucleotides | Biomolecular "breadboard" for circuit prototyping [3] | Protein expression, circuit characterization |
| Quorum Sensing System | AHL synthases, receptors, promoter elements | Population-density dependent gene regulation [2] | Coordinated behaviors, population control |
| CRISPR Multiplex Editing | Cas effectors, crRNA arrays, repair templates | Programmable genome editing at multiple loci [2] | Metabolic engineering, functional genomics |
| CO2 Fixation System | CO2-fixing enzymes, regeneration systems | Carbon conversion to valuable chemicals [2] | Sustainable biomanufacturing |

Experimental Protocols for Implementation

Protocol for Endogenous E. coli-Based TX-TL Cell-Free Expression

This protocol creates an efficient endogenous E. coli based transcription-translation (TX-TL) cell-free expression system that preserves native regulatory mechanisms while maintaining high protein expression capability [3].

Day 1: Preparation of Bacterial Culture

- Prepare bacterial culture media, culture plates, and media supplements as described in the original protocol [3].

- Streak BL21-Rosetta2 strain from -80°C onto a 2xYT+P+Cm agar plate.

- Incubate for at least 15 hours at 37°C or until colonies are visible.

Day 2: Culture Expansion and Reagent Preparation

- Prepare buffers and supplements including S30A buffer.

- Sterilize materials required for day 3.

- Prepare mini-culture 1: Add 4 ml of 2xYT+P media and 4 μl of Cm to a 12 ml sterile culture tube, pre-warm to 37°C for 30 minutes.

- Inoculate mini-culture 1 with a colony from the agar plate, incubate at 220 rpm, 37°C for 8 hours.

- 7.5 hours later, prepare mini-culture 2: Add 50 ml of 2xYT+P media and 50 μl of Cm to a sterile 250 ml Erlenmeyer flask, pre-warm to 37°C for 30 minutes.

- Inoculate mini-culture 2 with 100 μl of mini-culture 1, incubate at 220 rpm, 37°C for 8 hours.

Day 3: Cell Growth and Lysis

- Weigh four empty sterile 50 ml Falcon tubes and chill on ice.

- 7.5 hours after previous step, prepare final bacterial culture media: Transfer 660 ml of 2xYT+P media into each of six 4 L Erlenmeyer flasks, pre-warm to 37°C for 30 minutes.

- Add 6.6 ml of mini-culture 2 into each 4 L Erlenmeyer flask.

- Incubate at 220 rpm, 37°C until culture reaches OD₆₀₀ of 1.5-2.0 (mid-log growth phase).

- Transfer all cultures evenly into four 1 L centrifuge bottles and centrifuge at 5,000 × g for 12 minutes at 4°C.

- Complete S30A buffer preparation by adding 4 ml of 1 M DTT to 2 L of previously prepared S30A buffer, maintain on ice.

- Remove supernatant, add 200 ml of S30A buffer at 4°C to each bottle, shake vigorously until pellet is completely solubilized.

- Centrifuge again at 5,000 × g for 12 minutes at 4°C.

- Remove supernatant, add 200 ml of S30A buffer, repeat centrifugation.

- After final centrifugation, estimate pellet weight and add 0.9 ml of S30A buffer per gram of pellet.

- Transfer suspension to bead-beater chamber, add 100 g of 0.1 mm glass beads.

- Lyse cells by bead-beating for 3 minutes, with 30-second intervals to prevent overheating.

- Centrifuge lysate at 12,000 × g for 10 minutes at 4°C.

- Transfer supernatant to fresh tubes, centrifuge again at 12,000 × g for 10 minutes at 4°C.
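The resuspension step above (0.9 ml of S30A buffer per gram of pellet) is simple arithmetic, and the sketch below just keeps it explicit per tube. It assumes the pellet weight is taken as the filled weight minus the empty weight of the pre-weighed 50 ml Falcon tubes from the start of Day 3; the function name and the tube weights in the usage example are hypothetical.

```python
from typing import List, Tuple

S30A_ML_PER_GRAM = 0.9  # protocol value: 0.9 ml S30A buffer per gram of wet pellet


def s30a_volumes_ml(tubes: List[Tuple[float, float]]) -> List[float]:
    """Return the S30A volume (ml) for each tube, given (filled_g, empty_g) weights.

    Assumes each pellet weight is the filled-tube weight minus the empty weight
    recorded for the same pre-weighed tube.
    """
    volumes = []
    for filled_g, empty_g in tubes:
        pellet_g = filled_g - empty_g
        if pellet_g <= 0:
            raise ValueError(f"non-positive pellet weight: {pellet_g:.2f} g")
        volumes.append(round(pellet_g * S30A_ML_PER_GRAM, 2))
    return volumes


# Hypothetical weights (g) for the four pre-weighed tubes.
tubes = [(19.8, 13.2), (20.1, 13.4), (19.5, 13.1), (20.0, 13.3)]
print(s30a_volumes_ml(tubes))
```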

Day 4: Extract Clarification and Dialysis

- Transfer supernatant (crude extract) to dialysis cassettes.

- Dialyze against 2 L of S30B buffer for 3 hours at 4°C with stirring.

- Transfer dialyzed extract to fresh tubes, centrifuge at 12,000 × g for 10 minutes at 4°C.

- Aliquot supernatant, flash-freeze in liquid nitrogen, store at -80°C.

Day 5: TX-TL Reaction Assembly

- Prepare TX-TL reaction master mix on ice.

- For each 10 μl reaction: 4.5 μl crude cell extract, 1.5 μl of 10× reporter DNA (10-20 nM), 4 μl of reaction mix.

- Incubate reaction at 29°C for 8-14 hours, monitoring protein expression.

- The entire protocol yields enough material for approximately 3,000 single reactions with material costs of approximately $0.11 per 10 μl reaction [3].
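The reaction recipe above scales linearly with the number of reactions. The sketch below computes master-mix volumes for crude extract and reaction mix, keeps the 1.5 μl DNA addition per well, and estimates material cost from the quoted $0.11 per reaction (for the full yield of about 3,000 reactions, that works out to roughly $330). The `plan_reactions` helper, the `extra_reactions` overage, and the choice to add DNA separately are assumptions for illustration, not part of the published protocol.

```python
# Per-reaction volumes (μl) for one 10 μl TX-TL reaction, taken from the protocol.
EXTRACT_UL = 4.5
DNA_UL = 1.5
REACTION_MIX_UL = 4.0
COST_PER_REACTION_USD = 0.11  # approximate material cost reported for the protocol


def plan_reactions(n_reactions: int, extra_reactions: int = 1) -> dict:
    """Master-mix volumes (extract + reaction mix) plus the per-well DNA addition.

    `extra_reactions` is pipetting overage (an assumption, not from the protocol).
    DNA is kept out of the master mix because the template may differ between wells.
    """
    if n_reactions < 1 or extra_reactions < 0:
        raise ValueError("need at least one reaction and non-negative overage")
    n_mix = n_reactions + extra_reactions
    return {
        "extract_ul": EXTRACT_UL * n_mix,
        "reaction_mix_ul": REACTION_MIX_UL * n_mix,
        "master_mix_per_well_ul": EXTRACT_UL + REACTION_MIX_UL,
        "dna_per_well_ul": DNA_UL,
        "estimated_cost_usd": round(n_reactions * COST_PER_REACTION_USD, 2),
    }


print(plan_reactions(96))
```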

Protocol for Engineering Bacillus methanolicus

The methylotrophic bacterium Bacillus methanolicus MGA3 represents a versatile thermophilic platform for sustainable bioproduction [2].

Genetic Tool Development:

- Implement advanced transformation protocols optimized for this thermophilic methylotroph.

- Apply CRISPR/Cas9-based genome editing systems specifically adapted for B. methanolicus.

- Utilize genome-scale models (GSMs) for informed strain design.

Metabolic Engineering:

- Engineer central carbon metabolism for expanded substrate utilization including methanol, mannitol, arabitol, glucose, starch, and xylose.

- Modify metabolic pathways for production of target compounds: TCA cycle intermediates, RuMP cycle intermediates, and their derivatives.

- Optimize expression of heterologous proteins leveraging the organism's capacity for producing thermostable proteins.

Cultivation and Characterization:

- Cultivate engineered strains under controlled conditions with temperature optimization for thermophilic growth.

- Monitor biomass accumulation and product formation.

- Analyze metabolic fluxes and pathway performance using appropriate analytical methods.


The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Research Reagents for Synthetic Biology

| Reagent/Material | Function | Example Application | Technical Notes |
|---|---|---|---|
| BL21-Rosetta2 E. coli Strain | Protein expression host with rare tRNA supplementation | Cell-free extract preparation for TX-TL systems [3] | Contains plasmid encoding rare tRNAs; selected with chloramphenicol |
| S30A and S30B Buffers | Cell lysis and dialysis buffers | Crude cell extract preparation and dialysis [3] | Contains Tris-acetate, magnesium and potassium glutamate, DTT |
| 2xYT+P Media | Rich bacterial growth medium | Culture growth for cell extract preparation [3] | Contains yeast extract, tryptone, NaCl, phosphate buffer |
| 3-Phosphoglyceric Acid (3-PGA) | Energy source for cell-free reactions | ATP regeneration in TX-TL systems [3] | Superior protein yields compared to creatine phosphate and phosphoenolpyruvate |
| Mg-/K-Glutamate | Salt components in reaction buffer | Enhancing efficiency in TX-TL systems [3] | Replacement for Mg-/K-acetate in previous protocols |
| Switchable Transcription Terminators (SWTs) | Programmable transcriptional regulation | Construction of logic gates and regulatory circuits [2] | Low leaky expression, high ON/OFF ratios |
| Aptamers | Ligand-binding nucleic acid elements | Modular transcriptional regulation [2] | Can be combined with SWTs for improved regulation |
| CRISPR Effectors (Cas9, Cas12 variants) | Genome editing tools | Multiplex genome editing, metabolic engineering [2] | Includes newer, smaller variants (CasMINI, Cas12j2, Cas12k) |
| Plasmid DNA Templates | Genetic information carriers | Protein expression in cell-free and cellular systems [3] | e.g., pBEST-OR2-OR1-Pr-UTR1-deGFP-T500 for deGFP expression |

Future Directions and Concluding Remarks

The field of synthetic biology continues to evolve with several emerging trends shaping its future. Generative Artificial Intelligence (GAI) is transforming enzyme design from structure-centric strategies toward function-oriented paradigms [2]. Computational frameworks now span the entire design pipeline, including active site design, backbone generation, inverse folding, and virtual screening. GAI approaches such as diffusion and flow-matching models enable the generation of protein backbones pre-configured for catalysis, while inverse folding methods incorporate atomic-level constraints to optimize sequence-function compatibility [2].

Multiplex genome editing technologies are advancing rapidly, enabling precise, high-throughput editing across biological systems [2]. The development of base editors and prime editors allows efficient editing across multiple loci without double-strand breaks, setting the foundation for future innovations in synthetic biology, crop improvement, and therapeutic intervention in multigene diseases [2].

The integration of electrocatalysis and biotransformation represents another promising direction for CO2-based biomanufacturing [2]. These hybrid systems synergize the advantages of electrocatalytic CO2 reduction (which achieves C1/C2 products with high formation rates) with biosynthesis (which utilizes these C1/C2 species for carbon chain elongation) for efficient CO2 upcycling [2].

As synthetic biology matures, the parts-devices-systems hierarchy continues to provide a foundational framework for engineering biology. The ongoing development of standardized biological components, coupled with advanced computational tools and experimental methodologies, promises to accelerate the design-build-test-learn cycle and expand the applications of synthetic biology in biomedical research and therapeutic development.

Synthetic biology has emerged as a formal engineering discipline that utilizes concepts from genetics, biophysics, and molecular biology to repurpose natural biological systems for applications in biomedicine and biotechnology [12] [13]. At the core of this field lie genetic circuits—sophisticated assemblies of biological components that process and manipulate information within living cells to create novel, useful biological functions [14]. These circuits enable researchers to program cells with new capabilities, much like electronic circuits enable computers to perform complex operations. The engineering of these circuits has progressed from simple proof-of-concept designs to complex platforms capable of Boolean logic, memory storage, and oscillatory behavior, with significant implications for therapeutic development, diagnostic tools, and biomanufacturing [15] [13].

The design-build-test-learn cycle represents the fundamental engineering framework in synthetic biology, though it faces unique challenges when applied to biological systems. Unlike conventional engineering substrates, biology presents distinctive challenges stemming from incomplete understanding of natural systems and limitations in manipulation tools [13]. Furthermore, circuit functionality is profoundly influenced by cellular context, including signal noise, metabolic dynamics, and stability considerations [15]. Despite these challenges, synthetic biology is advancing toward rational and high-throughput biological engineering through developing core platforms that span the entire biological design cycle, including DNA construction, parts libraries, computational design tools, and interfaces for manipulating and probing synthetic circuits [13].

This technical guide examines the fundamental principles of three core genetic circuit types—switches, oscillators, and Boolean logic systems—within the context of biomedical engineering research. By providing both theoretical foundations and practical implementation methodologies, we aim to equip researchers and drug development professionals with the knowledge necessary to leverage these powerful tools in their work.

Boolean Logic Systems in Genetic Circuits

Fundamental Principles and Implementation

Boolean logic gates form the computational foundation of genetic circuits, enabling cells to perform decision-making operations based on molecular inputs. These gates process one or more input signals to produce specific outputs according to logical rules, with the NOR gate being particularly significant as any logic function can be implemented through NOR gates alone [15]. In biological terms, inputs typically consist of small molecule inducers or environmental signals, while outputs are often reporter proteins or metabolic changes.

The implementation of Boolean logic in biological systems differs fundamentally from electronic computing. Genetic logic gates operate through the biochemical reactions of gene expression: ON and OFF states are represented by high and low protein concentrations, and DNA-binding proteins such as transcription factors set those concentrations to realize specific logical functions [16]. A typical genetic NOR gate, for instance, might use arabinose (Ara) and anhydrotetracycline (aTc) as inputs, with yellow fluorescent protein (YFP) serving as the output reporter [15]. The gate produces output (YFP expression) only when both inputs are absent, following the NOR truth table.
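The claim that any logic function can be built from NOR gates alone can be checked with a minimal sketch in plain Python Boolean logic, which abstracts away all biochemistry: every other gate below is composed only from NOR. In a cell, each nested call would correspond to another layer of genetic NOR gates, which would typically need its own orthogonal repressor, so this is a logical illustration rather than a circuit design. The `gate_*` function names are hypothetical.

```python
def gate_nor(a: bool, b: bool) -> bool:
    """Output is ON only when both inputs are OFF."""
    return not (a or b)


# Every gate below is built only from gate_nor calls (NOR universality).
def gate_not(a: bool) -> bool:
    return gate_nor(a, a)


def gate_or(a: bool, b: bool) -> bool:
    return gate_not(gate_nor(a, b))


def gate_and(a: bool, b: bool) -> bool:
    return gate_nor(gate_not(a), gate_not(b))


def gate_nand(a: bool, b: bool) -> bool:
    return gate_not(gate_and(a, b))


# Ara/aTc NOR gate from the text: YFP is expressed only when neither inducer is present.
for ara in (False, True):
    for atc in (False, True):
        print(f"Ara={int(ara)} aTc={int(atc)} -> YFP={int(gate_nor(ara, atc))}")
```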

The mathematical foundation for modeling these systems relies heavily on nonlinear Hill dynamics, which describe the binding of transcription factors to DNA promoter regions. For a genetic NOT gate, the promoter activity function can be described as:

$$f_{\text{NOT}}(u) = \frac{1}{1 + \left(\frac{u}{K}\right)^n}$$

where $u$ represents the concentration of repressor transcription factor, $K$ is the Hill constant, and $n$ is the Hill coefficient representing cooperativity [16]. Similarly, an AND gate with two inputs follows:

$$f_{\text{AND}}(u_1, u_2) = \frac{\left(\frac{u_1}{K_1}\right)^{n_1} \left(\frac{u_2}{K_2}\right)^{n_2}}{1 + \left(\frac{u_1}{K_1}\right)^{n_1} + \left(\frac{u_2}{K_2}\right)^{n_2} + \left(\frac{u_1}{K_1}\right)^{n_1} \left(\frac{u_2}{K_2}\right)^{n_2}}$$

More complex gates can be constructed through modular combinations. A NAND gate, for example, can be implemented by cascading an AND gate into a NOT gate, with the dynamics:

$$\begin{aligned} \dot{p}_{\text{AND}} &= \alpha_P f_{\text{AND}}(u_1, u_2) - \gamma_P p_{\text{AND}} + \alpha_{P_0, \text{AND}}, \\ \dot{p}_{\text{NOT}} &= \alpha_P f_{\text{NOT}}(p_{\text{AND}}) - \gamma_P p_{\text{NOT}} + \alpha_{P_0, \text{NOT}}, \\ p_{\text{NAND}}(u_1, u_2) &= p_{\text{NOT}} \end{aligned}$$

where $p$ represents protein concentrations, $\alpha_P$ denotes the production rate, the $\alpha_{P_0}$ terms denote basal (leaky) expression, and $\gamma_P$ signifies the degradation rate [16].
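The AND-to-NOT cascade can be integrated numerically to confirm that it reproduces the NAND truth table. This is a minimal sketch under stated assumptions: both stages share $\alpha_P$ and $\gamma_P$, a single `basal` value stands in for both $\alpha_{P_0}$ terms, $K_1 = K_2$ and $n_1 = n_2$, all parameter values are arbitrary dimensionless choices, and forward Euler is used only for brevity. The function names are hypothetical.

```python
def f_not(u: float, K: float, n: float) -> float:
    """Hill repression: promoter activity falls as repressor u rises."""
    return 1.0 / (1.0 + (u / K) ** n)


def f_and(u1: float, u2: float, K1: float, K2: float, n1: float, n2: float) -> float:
    """Two-input Hill AND: activity is high only when both inputs are high."""
    a = (u1 / K1) ** n1
    b = (u2 / K2) ** n2
    return a * b / (1.0 + a + b + a * b)


def simulate_nand(u1: float, u2: float, alpha_p: float = 1.0, gamma_p: float = 1.0,
                  basal: float = 0.01, K_and: float = 1.0, n_and: float = 2.0,
                  K_not: float = 0.3, n_not: float = 2.0,
                  dt: float = 0.01, t_end: float = 20.0) -> float:
    """Forward-Euler integration of the AND -> NOT cascade; returns p_NAND = p_NOT."""
    p_and = p_not = 0.0
    for _ in range(int(t_end / dt)):
        # Compute both derivatives from the current state before updating.
        dp_and = alpha_p * f_and(u1, u2, K_and, K_and, n_and, n_and) - gamma_p * p_and + basal
        dp_not = alpha_p * f_not(p_and, K_not, n_not) - gamma_p * p_not + basal
        p_and += dt * dp_and
        p_not += dt * dp_not
    return p_not


HIGH = 10.0  # "input present" level in arbitrary units (assumption)
for u1 in (0.0, HIGH):
    for u2 in (0.0, HIGH):
        print(f"u1={u1:>4} u2={u2:>4} -> p_NAND={simulate_nand(u1, u2):.3f}")
```

Setting `K_not` below the steady-state level of $p_{\text{AND}}$ is what gives the second stage a clear OFF state; with `K_not` near 1 the contrast between ON and OFF shrinks considerably.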

Advanced Boolean Circuit Designs

Recent research has demonstrated increasingly sophisticated Boolean implementations. A 2019 study successfully constructed 12 circuit logic gate modules in Escherichia coli, including "AND," "NAND," "OR," and "NOR" gates validated through reporter gene expression [17]. These circuits converted inputs into outputs via intermediate products of host metabolism, showcasing the potential for integrating synthetic circuits with native cellular processes.

Plant synthetic biology has also seen significant advances, with researchers establishing a predictive framework for genetic circuit design in both Arabidopsis thaliana and Nicotiana benthamiana [14]. This platform enabled the construction of 21 two-input genetic circuits with various logic functions (14 types) achieving high prediction accuracy (R² = 0.81), demonstrating the scalability of Boolean approaches in complex eukaryotic systems.

Table 1: Performance Characteristics of Genetic Boolean Gates

| Gate Type | Organism | Input Signals | Output Signal | Fold Change | Response Time | Reference |
|---|---|---|---|---|---|---|
| NOR | E. coli | Ara, aTc | YFP | ~50 | ~60 min | [15] |
| AND | E. coli | Multiple TFs | Fluorescence | ~40 | ~90 min | [16] |
| NAND | E. coli | PhlF, IcaR | Luciferase | ~30 | ~120 min | [16] |
| NOT | Plant | Auxin | Luciferase | ~40 | ~10 hours | [14] |
| AND | Plant | CK, Auxin | Luciferase | ~25 | ~12 hours | [14] |

Figure 1: Genetic NOR Gate Circuit. The circuit outputs YFP only when both the arabinose (Ara) and aTc inputs are absent, implementing Boolean NOR logic [15].

Genetic Switches and Oscillators

Bistable Switches and Memory Systems

Genetic toggle switches represent a fundamental class of bistable systems that can maintain either of two stable states, functioning as biological memory devices. These switches typically comprise two mutually repressing genes, creating a system that can be toggled between states by transient external signals. Once switched, the circuit maintains its state even after the inducing signal is removed, enabling long-term, heritable memory at the cellular level [15] [13].

The mathematical foundation for bistable switches builds upon the dynamics of mutually inhibitory genes. The system can be described by coupled differential equations representing the production and degradation of the two repressor proteins:

$$\begin{aligned} \frac{dp_1}{dt} &= \alpha_1 \cdot f_{\text{NOT}}(p_2) - \gamma_1 p_1 \\ \frac{dp_2}{dt} &= \alpha_2 \cdot f_{\text{NOT}}(p_1) - \gamma_2 p_2 \end{aligned}$$

where $p_1$ and $p_2$ represent the concentrations of the two repressor proteins, $\alpha_i$ are their production rates, $\gamma_i$ are degradation rates, and $f_{\text{NOT}}$ represents the repression function [16]. Bistability occurs when specific parameter combinations yield two stable steady states separated by an unstable equilibrium.
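Both bistability and memory can be seen by integrating these equations. The minimal sketch below assumes a symmetric switch ($\alpha_1 = \alpha_2 = 10$, $\gamma_1 = \gamma_2 = 1$, $K = 1$, $n = 2$, all dimensionless and chosen for illustration) and models a transient inducer pulse, hypothetically, as fully inactivating repressor 1 at promoter 2 for a fixed time window. With these values the symmetric steady state ($p_1 = p_2 = 2$) is unstable, so trajectories settle into one of two asymmetric states.

```python
from typing import Optional, Tuple


def f_not(u: float, K: float = 1.0, n: float = 2.0) -> float:
    """Hill repression function, as in the NOT-gate equation."""
    return 1.0 / (1.0 + (u / K) ** n)


def simulate_toggle(p1: float, p2: float, alpha: float = 10.0, gamma: float = 1.0,
                    t_end: float = 50.0, dt: float = 0.01,
                    pulse: Optional[Tuple[float, float]] = None) -> Tuple[float, float]:
    """Forward-Euler integration of two mutually repressing genes.

    pulse: optional (t_on, t_off) window during which a hypothetical inducer
    fully inactivates repressor 1, releasing promoter 2 from repression.
    """
    for step in range(int(t_end / dt)):
        t = step * dt
        induced = pulse is not None and pulse[0] <= t < pulse[1]
        p1_seen_by_promoter2 = 0.0 if induced else p1
        dp1 = alpha * f_not(p2) - gamma * p1
        dp2 = alpha * f_not(p1_seen_by_promoter2) - gamma * p2
        p1, p2 = p1 + dt * dp1, p2 + dt * dp2
    return p1, p2


print(simulate_toggle(5.0, 0.1))  # settles with p1 high
print(simulate_toggle(0.1, 5.0))  # settles with p2 high
print(simulate_toggle(5.0, 0.1, t_end=60.0, pulse=(10.0, 20.0)))  # flipped, and stays flipped
```

The last call is the memory test: the pulse ends at t = 20, yet the circuit remains in the p2-high state for the remaining 40 time units because each repressor now sustains the other's OFF state.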

Implementation of robust toggle switches requires careful balancing of kinetic parameters and consideration of cellular context. Key design challenges include minimizing metabolic burden, ensuring orthogonal components to prevent crosstalk with native systems, and maintaining stability across cell divisions. Successful implementations have been demonstrated in various host organisms including bacteria, yeast, and mammalian cells, with applications ranging from long-term cellular memory to lineage tracing in development [15].

Oscillatory Systems and Biological Clocks

Genetic oscillators generate periodic waveforms in protein concentrations, enabling biological timing functions comparable to electronic clocks. The repressilator, first constructed by Elowitz and Leibler in 2000, represents a landmark achievement in synthetic biology: a three-gene ring network where each gene represses the next in the cycle [16]. In this circular topology, the time delays inherent in transcription, translation, and degradation can sustain oscillations under appropriate parameter conditions.

The dynamics of oscillatory systems are typically modeled using extended versions of the basic gene expression equations:

$$\begin{aligned} \dot{m}_i &= \alpha_i f_i(p_{i-1}) - \gamma_{m_i} m_i + \alpha_{i,0} \\ \dot{p}_i &= \beta_i m_i - \gamma_{p_i} p_i \end{aligned}$$

where $m_i$ and $p_i$ represent mRNA and protein concentrations respectively for gene $i$, $\alpha_i$ and $\beta_i$ are production rates, $\gamma_{m_i}$ and $\gamma_{p_i}$ are degradation rates, $\alpha_{i,0}$ represents basal expression, and $f_i$ describes the repression function [16]. For the three-gene ring, indices are taken cyclically, so gene 1 is repressed by the protein of gene 3 ($p_0 \equiv p_3$).
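A short simulation shows these equations producing sustained oscillations. The sketch assumes a dimensionless, fully symmetric ring: $\gamma_{m_i} = 1$, $\beta_i = \gamma_{p_i} = \beta$, and $f_i(p) = 1/(1 + p^n)$ for all genes, with illustrative values $\alpha = 216$, $\alpha_0 = 0.216$, $\beta = 5$, $n = 2$. For these values a linear stability check of the steady state places the model in the oscillatory regime. The start must be asymmetric: a symmetric start keeps all three genes identical and simply settles to the steady state. Forward Euler with a small step is used for brevity; the function names are hypothetical.

```python
from typing import List


def simulate_repressilator(alpha: float = 216.0, alpha0: float = 0.216, beta: float = 5.0,
                           n: float = 2.0, dt: float = 0.002, t_end: float = 200.0,
                           sample_every: int = 50) -> List[float]:
    """Forward-Euler integration of the three-gene ring; returns sampled p_1(t)."""
    m = [0.0, 0.0, 0.0]
    p = [1.0, 2.0, 3.0]  # asymmetric start (a symmetric one would not oscillate)
    trace = []
    for step in range(int(t_end / dt)):
        # Gene i is repressed by the protein of gene i-1; p[-1] wraps to gene 3.
        dm = [alpha / (1.0 + p[i - 1] ** n) - m[i] + alpha0 for i in range(3)]
        dp = [beta * m[i] - beta * p[i] for i in range(3)]
        m = [m[i] + dt * dm[i] for i in range(3)]
        p = [p[i] + dt * dp[i] for i in range(3)]
        if step % sample_every == 0:
            trace.append(p[0])
    return trace


def count_peaks(trace: List[float]) -> int:
    """Count strict local maxima in a sampled time course."""
    return sum(1 for k in range(1, len(trace) - 1)
               if trace[k - 1] < trace[k] > trace[k + 1])


trace = simulate_repressilator()
late = trace[len(trace) // 2:]  # discard the initial transient
print(f"peaks in second half: {count_peaks(late)}, amplitude: {max(late) - min(late):.1f}")
```

Counting peaks only in the second half of the run separates a sustained limit cycle from a transient that dies out after the initial condition.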

More recent oscillator designs have incorporated additional regulatory layers such as post-translational control, phosphorylation cascades, and intercellular signaling to enhance robustness and tunability. Applications include programmed drug delivery systems that release therapeutics at specific times, synthetic cell cycle controllers, and synchronization devices for coordinating population-level behaviors [13] [16].

Table 2: Genetic Switch and Oscillator Performance Parameters

| Circuit Type | Host Organism | Number of Components | Switching Time / Period | Stability | Applications |
|---|---|---|---|---|---|
| Toggle Switch | E. coli | 2 repressors, 2 promoters | 2-3 hours | >50 generations | Cellular memory, decision making |
| Repressilator | E. coli | 3 repressors, 3 promoters | 2-4 hour period | ~10 cycles | Gene expression timing, rhythm generation |
| Dual-feedback Oscillator | E. coli | 2 repressors, activators | 1-3 hour period | >40 cycles | Programmable drug delivery |
| CRISPRi Switch | Mammalian cells | 1 dCas9, sgRNA | 12-24 hours | >2 weeks | Gene therapy, cell state control |

Figure 2: Repressilator Oscillator Circuit. A three-gene ring network where each gene represses the next, creating sustained oscillations in protein concentrations [16].

Experimental Methodology and Characterization

Quantitative Characterization Framework

Robust quantitative characterization represents a critical requirement for predictable genetic circuit design. In plant systems, researchers have established a rapid (~10 days), reproducible framework based on the concept of relative promoter units (RPUs) to normalize measurements across experimental batches [14]. This approach selects a reference promoter (e.g., the 200-bp 35S promoter) and defines its activity as 1 RPU within each protoplast batch, converting raw measurements to standardized units that enable comparative analysis across setups.

The experimental pipeline typically incorporates a normalization module featuring a constitutively expressed reference protein (e.g., β-glucuronidase, GUS) alongside the circuit output reporter (e.g., firefly luciferase, LUC). The LUC/GUS ratio provides normalized values that significantly reduce variation. For a genetic circuit with output $O$ and reference signal $R$, the RPU value is calculated as:

$$\text{RPU} = \frac{O_{\text{sample}} / R_{\text{sample}}}{O_{\text{reference}} / R_{\text{reference}}}$$

This normalization strategy has demonstrated substantial improvement in measurement reproducibility, enabling predictive circuit design with high accuracy (R² = 0.81 for plant circuits) [14].
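The RPU formula translates directly into code. This minimal sketch computes RPU for one sample and for a batch of samples measured alongside the reference construct in the same protoplast batch; the function names and the LUC/GUS readings in the usage example are hypothetical.

```python
from typing import Dict, Tuple


def rpu(o_sample: float, r_sample: float, o_reference: float, r_reference: float) -> float:
    """Relative promoter units for one sample within one batch.

    o_*: circuit output (e.g. LUC); r_*: constitutive normalizer (e.g. GUS).
    The reference construct (e.g. the 200-bp 35S promoter) is 1 RPU by definition.
    """
    if min(r_sample, o_reference, r_reference) <= 0:
        raise ValueError("normalizer and reference signals must be positive")
    return (o_sample / r_sample) / (o_reference / r_reference)


def batch_to_rpu(samples: Dict[str, Tuple[float, float]],
                 reference: Tuple[float, float]) -> Dict[str, float]:
    """samples: {name: (luc, gus)}; reference: (luc, gus) from the same batch."""
    return {name: rpu(luc, gus, *reference) for name, (luc, gus) in samples.items()}


# Hypothetical plate-reader values for one protoplast batch.
batch = {"circuit_A": (1200.0, 400.0), "circuit_B": (90.0, 300.0)}
print(batch_to_rpu(batch, reference=(600.0, 300.0)))
```

Because the reference is re-measured in every batch, batch-to-batch differences in transfection efficiency or cell state cancel in the ratio, which is the source of the reproducibility gain described above.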

Modular Part Design and Engineering

Expanding the repertoire of well-characterized biological parts is essential for sophisticated circuit construction. Modular synthetic promoters represent a key advancement, enabling predictable integration of regulatory functions. A common design approach uses a strong constitutive promoter (e.g., the 200-bp 35S promoter in plants) as a backbone, with specific operator sequences inserted at strategic positions to create repressible promoters [14].

Design optimization involves systematic testing of operator positions to maximize dynamic range while maintaining sufficient basal expression. Research has shown that placing operators between CAAT boxes and the transcription start site typically achieves improved fold-repression [14]. A library of synthetic promoter-repressor pairs using TetR family repressors (LmrA, PhlF, IcaR, BM3R1, SrpR, and BetI) demonstrated fold-repression ranging from 4.3 (IcaR) to 847 (PhlF), with high orthogonality minimizing crosstalk between components [14].

Table 3: Research Reagent Solutions for Genetic Circuit Implementation

| Reagent/Category | Specific Examples | Function/Application | Key Characteristics |
|---|---|---|---|
| Repressor Proteins | TetR, PhlF, IcaR, LmrA, BM3R1 | Transcriptional regulation of synthetic circuits | Orthogonal, high dynamic range, minimal crosstalk |
| Synthetic Promoters | 35S-derived promoters with operator inserts | Context-dependent gene expression control | Modular, tunable strength, inducible/repressible |
| Reporter Systems | YFP, Luciferase, GUS | Quantitative circuit output measurement | High sensitivity, broad dynamic range, minimal toxicity |
| Sensor Systems | Auxin sensor (GH3.3), cytokinin sensor (TCSn) | Detection of small molecule inputs | High sensitivity, specific ligand recognition |
| DNA Assembly Systems | Golden Gate, Gibson Assembly | Physical construction of genetic circuits | High efficiency, modular, standardized |

Network Analysis Approaches for Circuit Design

Network Visualization and Analysis

As genetic circuits increase in complexity, network approaches provide powerful methods for structuring, analyzing, and visualizing design data. Converting circuit designs into network representations creates dynamic structures that can be interactively shaped into subnetworks based on specific requirements such as biological part hierarchy or molecular interactions [15]. This approach enables automatic scaling of abstraction levels, tailoring visualizations to particular analysis needs through coloring or clustering nodes based on types (e.g., genes, promoters, proteins).

A significant advantage of network representations is their ability to integrate diverse data types beyond genetic sequences alone, including circuit modularity, functional details, implementation instructions, dynamical predictions, and validation strategies [15]. Knowledge graphs—structured directed graphs where nodes and edges contain semantic labels—have proven particularly valuable for biological applications, enabling complex control over underlying data and arrangement into multiple abstraction layers.

Standardized data formats like Synthetic Biology Open Language (SBOL) facilitate this network-based approach by describing both structural (e.g., DNA sequences) and functional (e.g., regulation interactions) information [15]. The transformation of design files into networks follows a systematic process: initial conversion into an intermediate data structure compatible across formats, followed by application of graph theory methods to calculate shortest paths between entities, identify clusters, and find intersections within the design [15].
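The design-to-network transformation can be illustrated on the repressilator's three-repressor ring (LacI, TetR, and λ CI). This minimal Python sketch stores typed nodes and semantically labeled edges in plain dictionaries, as a stand-in for a real SBOL document or knowledge-graph library, then uses breadth-first search to find the shortest regulatory path between two entities and groups nodes by type. The promoter names are simplified placeholders and should be read as hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Typed nodes (the "type" drives clustering or coloring in a visualization).
NODES: Dict[str, str] = {
    "P_lac": "promoter", "tetR": "cds", "TetR": "protein",
    "P_tet": "promoter", "cI": "cds", "CI": "protein",
    "P_lambda": "promoter", "lacI": "cds", "LacI": "protein",
}
# Directed edges with semantic labels: (source, label, target).
EDGES: List[Tuple[str, str, str]] = [
    ("P_lac", "drives", "tetR"), ("tetR", "encodes", "TetR"), ("TetR", "represses", "P_tet"),
    ("P_tet", "drives", "cI"), ("cI", "encodes", "CI"), ("CI", "represses", "P_lambda"),
    ("P_lambda", "drives", "lacI"), ("lacI", "encodes", "LacI"), ("LacI", "represses", "P_lac"),
]


def shortest_path(start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search over the directed edges; None if goal is unreachable."""
    adjacency: Dict[str, List[str]] = {node: [] for node in NODES}
    for src, _label, dst in EDGES:
        adjacency[src].append(dst)
    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            current: Optional[str] = node
            while current is not None:  # walk back to the start
                path.append(current)
                current = previous[current]
            return path[::-1]
        for nxt in adjacency[node]:
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


def nodes_of_type(node_type: str) -> List[str]:
    return [node for node, kind in NODES.items() if kind == node_type]


print(shortest_path("LacI", "CI"))
print(nodes_of_type("protein"))
```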

Design Principles for Biological Network Figures

Effective visualization of genetic circuits as networks requires careful attention to design principles. The first step is to decide the figure's purpose: whether it should convey network functionality, structure, or specific interactions [18]. For functional relationships, data-flow encodings with nodes connected by arrows effectively illustrate interaction cascades, while undirected edges better represent structural associations.

Alternative layouts beyond conventional node-link diagrams may enhance clarity for specific data types. Adjacency matrices excel with dense networks, enabling clear visualization of edge attributes through cell coloring and readable node labels without clutter [18]. For hierarchical circuits, fixed layouts or circular arrangements may provide more intuitive representations.
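As a small illustration of the matrix layout, the sketch below encodes the repressilator (LacI represses TetR, TetR represses cI, cI represses LacI) as a signed adjacency matrix. The +1/−1/0 cell encoding is an assumption chosen for illustration; in a figure, the same values would map to cell colours. The directed regulation produces an asymmetric matrix, whereas an undirected structural network would produce a symmetric one.

```python
def adjacency_matrix(nodes, signed_edges):
    """Rows are regulators, columns are targets; +1 activation, -1 repression, 0 none."""
    index = {name: i for i, name in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for source, target, sign in signed_edges:
        matrix[index[source]][index[target]] = sign
    return matrix

def is_symmetric(matrix):
    """Undirected (structural) networks give symmetric matrices; directed ones usually do not."""
    size = len(matrix)
    return all(matrix[i][j] == matrix[j][i] for i in range(size) for j in range(size))

def as_text(nodes, matrix):
    """Plain-text view: '+' activation, '-' repression, '.' no edge; labels stay readable."""
    symbol = {1: "+", -1: "-", 0: "."}
    width = max(len(name) for name in nodes)
    return [name.rjust(width) + " " + " ".join(symbol[v] for v in row)
            for name, row in zip(nodes, matrix)]

NODES = ["LacI", "TetR", "cI"]
EDGES = [("LacI", "TetR", -1), ("TetR", "cI", -1), ("cI", "LacI", -1)]
MATRIX = adjacency_matrix(NODES, EDGES)
```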

Visual encoding choices strongly affect interpretation. Quantitative (sequential) color schemes, such as yellow-to-green gradients, suit continuous values like expression variance, while divergent color schemes, such as red to blue, emphasize the extremes of differential expression [18]. Applying these principles consistently helps a network visualization communicate the intended story of the genetic circuit design.
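The two kinds of color scheme can be sketched as simple interpolation functions. The sketch assumes linear interpolation in RGB space and arbitrary endpoint colours. It also assumes a sign convention for the divergent scheme: negative values map to red, positive values to blue, and zero to white.

```python
def lerp_rgb(c0, c1, t):
    """Linear interpolation between two RGB triples, t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(c0, c1))

def to_hex(rgb):
    return "#{:02x}{:02x}{:02x}".format(*rgb)

YELLOW, GREEN = (255, 255, 0), (0, 128, 0)
RED, WHITE, BLUE = (255, 0, 0), (255, 255, 255), (0, 0, 255)

def sequential(value, vmin, vmax):
    """Continuous value (e.g. expression variance) -> yellow..green, clamped to range."""
    t = min(max((value - vmin) / (vmax - vmin), 0.0), 1.0)
    return to_hex(lerp_rgb(YELLOW, GREEN, t))

def diverging(value, limit):
    """Signed value (e.g. log fold change) -> red..white..blue, centred on zero."""
    t = min(max(value / limit, -1.0), 1.0)
    if t < 0:
        return to_hex(lerp_rgb(WHITE, RED, -t))
    return to_hex(lerp_rgb(WHITE, BLUE, t))
```

Linear RGB interpolation is not perceptually uniform. For published figures, palettes designed for this purpose, such as ColorBrewer's sequential and diverging schemes, are usually a better choice.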

Figure 3: Network Abstraction Hierarchy. Genetic circuit designs can be represented at multiple abstraction levels, from complete networks with all metadata to simplified input/output relationships [15].

Applications in Biomedical Engineering and Therapeutics

Genetic circuits offer transformative potential for biomedical applications, particularly in therapeutic development and delivery. In diagnostics, biosensing circuits programmed at the transcriptional, translational, and post-translational levels can detect disease-specific biomarkers and trigger appropriate responses [12]. Such systems support the identification of disease mechanisms and drug targets, the screening of new therapeutics, and the development of improved delivery methods.
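A common first-order model of a transcriptional biosensor is a Hill activation function that relates biomarker level to reporter output. The sketch below assumes normalized output (0 to 1) and uses hypothetical values for the half-activation constant `k` and the Hill coefficient `n`. It also computes the input fold-change needed to move the output from 10% to 90% of its range, 81^(1/n), which is a standard measure of how switch-like a sensor is.

```python
def hill_activation(x, k, n, y_min=0.0, y_max=1.0):
    """Reporter output for biomarker level x; k = half-activation level, n = Hill coefficient."""
    if x <= 0:
        return y_min
    return y_min + (y_max - y_min) * x**n / (k**n + x**n)

def detects(x, k, n, threshold=0.5):
    """Binary call: does the normalized output reach the chosen threshold?"""
    return hill_activation(x, k, n) >= threshold

def input_span_10_to_90(n):
    """Input fold-change moving output from 10% to 90% of its range: 81 ** (1/n)."""
    return 81 ** (1.0 / n)
```

With `n = 1` the sensor needs an 81-fold change in input to go from 10% to 90% output. With `n = 2` only a 9-fold change is needed, so higher cooperativity gives a sharper, more decisive response.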

Gene therapies represent a particularly promising application area, with circuits designed to correct disease-causing genetic mutations [12]. Research has demonstrated innovative approaches for conditions such as Duchenne muscular dystrophy, where synthetic circuits could potentially restore normal function. Synthetic biology is also expanding therapeutic platforms by creating biological devices that act as therapies themselves, moving beyond traditional small-molecule approaches [12].

Biocomputing represents another frontier, with researchers working toward complete biological computers through integration of control units (CU) with arithmetic/logic units (ALU) implemented via genetic circuits [16]. These systems adapt the fetch-decode-execute cycle of conventional computers to biological contexts, using genetic logic gates to process information and direct cellular operations. While still emerging, this approach could enable sophisticated decision-making capabilities within therapeutic cells.
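Genetic logic gates are often built from repressors. In NOR-gate designs such as those automated by the Cello design tool, two input promoters drive a single repressor gene, and the output promoter is the one that repressor represses. The sketch below models each gate with a Hill repression function. It also composes an OR gate by feeding a NOR output into a second repressor gate, which acts as NOT. All parameter values and the HIGH/LOW input levels are hypothetical.

```python
from itertools import product

HIGH, LOW = 1.0, 0.01  # hypothetical input promoter activities (arbitrary units)

def repressor_gate(x, k=0.2, n=2.0, y_min=0.01, y_max=1.0):
    """Output promoter activity when the repressor gene is driven at level x."""
    return y_min + (y_max - y_min) * k**n / (k**n + x**n)

def nor(a, b):
    # Two input promoters in tandem drive one repressor, so their activities add.
    return repressor_gate(a + b)

def or_gate(a, b):
    # OR = NOT(NOR): the NOR output promoter drives a second repressor gate.
    # Reusing the same parameters is a simplification; real layers need orthogonal repressors.
    return repressor_gate(nor(a, b))

def is_high(level, threshold=0.5):
    return level >= threshold

def truth_table(gate):
    """Digitize the gate's analog output for all four input combinations."""
    return {
        (ia, ib): is_high(gate(HIGH if ia else LOW, HIGH if ib else LOW))
        for ia, ib in product((False, True), repeat=2)
    }
```

Because NOR is functionally complete, layering such gates can in principle implement any Boolean function. In practice, the number of layers is limited by the availability of orthogonal repressors and by signal degradation between layers.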

The future of genetic circuits in biomedical engineering will likely focus on enhancing predictability across biological contexts, improving scalability, and developing standardized frameworks for clinical translation. As the field addresses these challenges, genetic circuits will play an increasingly important role in creating next-generation biomedical solutions.

The field of synthetic biomaterials represents a paradigm shift in biomedical engineering, merging the programmability of synthetic biology with the functional sophistication of materials science. This convergence has enabled the creation of intelligent, self-assembling systems that operate within biological environments to direct cellular behavior, deliver therapeutics, and regenerate tissues. These systems mark a significant evolution from static, inert biomaterials to dynamic, responsive interfaces that engage in reciprocal communication with biological systems [19] [20]. The underlying principle is to engineer biomolecules that spontaneously organize into predetermined nanostructures and macroscopic materials according to information encoded in their building blocks.

This technical guide examines the core principles, design methodologies, and applications of synthetic self-assembling biomaterials, framing them within the broader context of synthetic biology. For researchers and drug development professionals, mastering this interface offers unprecedented control over biological interactions, paving the way for next-generation diagnostic and therapeutic platforms. The materials discussed herein are characterized by their bioactivity, adaptability, and often, their biomimetic nature, drawing inspiration from the self-assembling structures ubiquitous in biology, such as the extracellular matrix, bacterial pili, and viral capsids [20] [21].

Fundamental Principles of Molecular Self-Assembly

Self-assembly in biological and synthetic contexts is governed by the spontaneous organization of molecular components into stable, ordered structures through non-covalent interactions. The engineering of these systems requires a deep understanding of the forces and rules that dictate this process.

Key Driving Forces and Molecular Interactions

The stability and structural fidelity of self-assembled biomaterials arise from a delicate balance of several weak, non-covalent interactions. The table below summarizes these fundamental forces.

Table 1: Fundamental Molecular Interactions in Biomaterial Self-Assembly

| Interaction Type | Energy Range (kJ/mol) | Role in Self-Assembly | Examples in Biomaterials |
|---|---|---|---|
| Hydrophobic Effect | <5 to >50 | Drives amphiphilic molecules to sequester hydrophobic domains away from water; a primary driver of micelle, vesicle, and fibril formation. | Peptide amphiphiles, phospholipid bilayers [21] |
| Electrostatic Interactions | 5-250 | Occur between charged amino acid side chains (e.g., Lys/Arg vs. Asp/Glu); enable responsiveness to pH and ionic strength. | Self-complementary ionic peptides (e.g., EAK16, RADA16) [20] [21] |
| Hydrogen Bonding | 4-60 | Form directional bonds between carbonyl and amide groups; critical for secondary structures (β-sheets, α-helices) and fibrillar networks. | β-sheet fibrils in peptide hydrogels [21] |
| π-π Stacking | 0-50 | Contributes to the self-assembly of aromatic residues (e.g., F, Y, W); often acts cooperatively with other forces. | Diphenylalanine (FF) peptide nanotubes [21] |
| Van der Waals Forces | 0.5-5 | Weak, non-specific attractions between electron clouds of adjacent atoms; contribute to packing within assemblies. | Molecular packing in crystalline domains of materials |
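Comparing the energies in Table 1 with the molar thermal energy RT (about 2.6 kJ/mol at 37 °C) shows why individually weak interactions can still produce stable assemblies. The sketch below performs that comparison. It treats contacts as independent and additive and ignores entropy, so it is only an order-of-magnitude illustration.

```python
import math

R_KJ_PER_MOL_K = 8.314462618e-3  # molar gas constant, kJ/(mol*K)

def thermal_energy(temp_c=37.0):
    """Molar thermal energy RT in kJ/mol at temp_c degrees Celsius."""
    return R_KJ_PER_MOL_K * (temp_c + 273.15)

def energy_in_rt(energy_kj_per_mol, temp_c=37.0):
    """Express an interaction energy as a multiple of RT."""
    return energy_kj_per_mol / thermal_energy(temp_c)

def boltzmann_factor(energy_kj_per_mol, temp_c=37.0):
    """exp(-E/RT): relative weight of a state lying E above a reference state."""
    return math.exp(-energy_in_rt(energy_kj_per_mol, temp_c))
```

A single 5 kJ/mol van der Waals contact is worth only about 2 RT, so thermal motion readily breaks it. Ten such contacts acting together add up to roughly 19 RT, which is why weak packing forces matter mainly through cooperativity.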

Biomimicry as a Design Framework

A powerful strategy in designing self-assembling systems is to emulate structures and processes found in nature. Natural biomolecular systems, such as the extracellular matrix (ECM), provide a blueprint for creating synthetic materials that can effectively interact with biology [22]. The native ECM is a highly dynamic and complex network that provides not only structural support but also biochemical signals to cells. Modern biomaterial design seeks to replicate this multifunctionality. Key biomimetic approaches include:

- Architectural Mimicry: Designing peptides that form fibrillar networks resembling collagen or fibronectin matrices to support cell adhesion and growth [20] [22].

- Sequential Mimicry: Incorporating short peptide sequences (e.g., RGD for cell adhesion) from native ECM proteins into synthetic polymers to confer specific bioactivity [22].

- Dynamic Mimicry: Engineering materials that can sense and respond to environmental cues (e.g., enzymes, pH, light) much like living tissues, using mechanisms such as reversible cross-linking or programmable degradation [20].
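Sequential mimicry can be checked computationally while designing a peptide. The sketch below scans a sequence for a short motif such as RGD. The example sequence, RADA16-I extended with a GGRGDS tail, is a hypothetical design used only for illustration.

```python
import re

def motif_positions(sequence, motif):
    """1-based start positions of every occurrence of motif (overlaps included)."""
    # A zero-width lookahead lets overlapping matches be reported.
    pattern = re.compile("(?=" + re.escape(motif.upper()) + ")")
    return [match.start() + 1 for match in pattern.finditer(sequence.upper())]

# Hypothetical design: RADA16-I followed by a GGRGDS extension carrying an RGD motif.
DESIGN = "RADA" * 4 + "GGRGDS"
```

For `DESIGN`, the only RGD motif starts at residue 19, just past the glycine spacer. A check like this confirms that the adhesion motif lies outside the self-assembling core rather than being buried inside it.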

Engineering Strategies for Synthetic Biomaterials

The creation of advanced biomaterials leverages a diverse toolkit from both synthetic biology and materials science, enabling precise control from the molecular to the macroscopic scale.

Genetic Engineering of Cellular Systems

Synthetic biology provides the tools to reprogram living cells as production factories for protein-based biomaterials. This involves engineering genetic circuits to control protein expression, folding, and even secretion.

Figure 1: Genetic circuit for biomaterial production.

Cells can be engineered to perceive specific inputs (biological, chemical, or physical) using synthetic receptors and optogenetic tools [20]. This information is processed by synthetic gene networks—ranging from simple Boolean logic gates to complex CRISPR-based recording systems—which then trigger the expression of output proteins. These outputs can be structural proteins (e.g., engineered silk, elastin) or enzymes that catalyze the formation of biomaterials [20] [8]. This approach allows for the production of complex, multifunctional materials directly from engineered cellular systems.
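For illustration, the sense-process-express chain can be reduced to a single inducible promoter that drives a structural protein, with no intermediate logic layer. The sketch integrates the standard production-degradation model dP/dt = alpha * f(I) - gamma * P, where f is a Hill activation function of inducer level I, and compares the result with the analytical steady state. All parameter values are hypothetical. The model also ignores the folding and secretion steps mentioned above.

```python
def promoter_activity(inducer, k=1.0, n=2.0):
    """Hill activation: fraction of maximal promoter activity (0..1)."""
    return inducer**n / (k**n + inducer**n)

def simulate_protein(inducer, alpha=10.0, gamma=0.1, dt=0.1, t_end=100.0):
    """Forward-Euler integration of dP/dt = alpha*f(I) - gamma*P, starting from P = 0."""
    production = alpha * promoter_activity(inducer)
    p = 0.0
    for _ in range(round(t_end / dt)):
        p += (production - gamma * p) * dt
    return p

def steady_state_protein(inducer, alpha=10.0, gamma=0.1):
    """Setting dP/dt = 0 gives P* = alpha * f(I) / gamma."""
    return alpha * promoter_activity(inducer) / gamma
```

After ten time constants (t_end × gamma = 10), the simulated level sits within a fraction of a percent of the steady state. The output therefore scales with the inducer input through f(I), which is the handle a sensing circuit uses to control material production.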

Peptide and Polymer-Based Self-Assembly

A bottom-up approach involves the de novo design and synthesis of self-assembling peptides and synthetic polymers. This field has expanded significantly, exploring chemical and sequence space beyond that used by biology to create novel functional materials [21].

- Self-Complementary Peptides: These peptides are designed with alternating polar and non-polar amino acids to form stable β-sheet membranes in salt solutions [21]. A classic example is the RADA16-I peptide (Ac-(RADA)4-CONH2), which forms a nanofibrous hydrogel that supports cell attachment and proliferation.

- Peptide Amphiphiles: Molecules consisting of a hydrophobic alkyl tail covalently linked to a hydrophilic peptide head. In water, they self-assemble into cylindrical nanofibers, with the peptide segments displayed on the surface to present bioactive epitopes [21].

- Stimuli-Responsive Peptides: These are engineered to undergo self-assembly in response to specific environmental triggers, such as a change in pH, the presence of a specific enzyme, or light exposure [20]. This allows for spatiotemporally controlled material formation in situ.
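The design rules for self-complementary peptides can be checked directly on a sequence. The sketch below analyzes RADA16-I, Ac-(RADA)4-CONH2. It reports the side-chain charge pattern and the net side-chain charge, and it tests whether hydrophobic and non-hydrophobic residues alternate. The residue classes are a deliberately coarse assumption: ionizable side chains are treated as fully charged at neutral pH, and pKa shifts are ignored. Because both termini are capped, they carry no charge.

```python
# Simplified residue classes at neutral pH; side-chain pKa shifts are ignored.
SIDE_CHAIN_CHARGE = {"R": +1, "K": +1, "D": -1, "E": -1}
HYDROPHOBIC = set("AVILMFW")  # coarse, assumed classification

RADA16_I = "RADA" * 4  # Ac-(RADA)4-CONH2; capped termini carry no charge

def net_side_chain_charge(seq):
    return sum(SIDE_CHAIN_CHARGE.get(res, 0) for res in seq)

def charge_pattern(seq):
    """'+' for basic, '-' for acidic, '0' for uncharged residues."""
    symbols = {1: "+", -1: "-", 0: "0"}
    return "".join(symbols[SIDE_CHAIN_CHARGE.get(res, 0)] for res in seq)

def alternates_polar_nonpolar(seq):
    """True if hydrophobic and non-hydrophobic residues strictly alternate."""
    classes = [res in HYDROPHOBIC for res in seq]
    return all(a != b for a, b in zip(classes, classes[1:]))
```

In a β-strand, alternate residues point to opposite faces. The alternating pattern therefore places every alanine on one face and the charged residues on the other. This charged face is where the complementary +/− pairing between strands takes place.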

Experimental Protocols and Characterization

Robust experimental methodologies are essential for the design, fabrication, and validation of self-assembling biomaterials.

Protocol: Fabrication of a β-Sheet Peptide Hydrogel

This protocol outlines the standard procedure for creating a self-supporting hydrogel from a β-sheet forming peptide, such as RADA16-I [21].

- Peptide Solution Preparation: Dissolve the purified peptide in deionized water at a concentration of 1% (w/v). The solution will have a low viscosity and acidic pH.

- Sonication: Sonicate the solution for 10-30 minutes to break up any pre-existing aggregates and ensure a monomeric or oligomeric starting state.

- Gelation Induction:

- Ionic Trigger: Add a calculated volume of phosphate-buffered saline (PBS) or cell culture medium to the peptide solution to achieve a final salt concentration of ~150 mM. Gently pipette to mix. The gel will form within seconds to minutes.

- pH Trigger: Alternatively, gently add enough NaOH or buffer to neutralize the acidic peptide solution (to approximately pH 7).

- Incubation: Allow the gel to incubate at room temperature or 37°C for 1 hour to complete the assembly process and stabilize the hydrogel network.

- Characterization:

- Microscopy: Analyze the gel's nanofibrous morphology using Scanning Electron Microscopy (SEM) or Atomic Force Microscopy (AFM).

- Spectroscopy: Confirm β-sheet formation using Fourier-Transform Infrared Spectroscopy (FTIR), specifically monitoring the amide I band for a shift to ~1630 cm⁻¹.

- Rheology: Perform oscillatory rheology to measure the storage modulus (G') and loss modulus (G'') of the hydrogel; a self-supporting gel typically shows G' greater than G''.
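The quantities used in this protocol are straightforward to compute. The illustrative sketch below covers three of them. First, it estimates the molar concentration of a 1% (w/v) RADA16-I solution from average residue masses. Second, it calculates the volume of a concentrated salt stock needed to bring the sample to ~150 mM. Third, it computes the loss tangent used to interpret rheology data. The stock concentration (an assumed 1.5 M total salt, similar in scale to a 10× PBS) and the 100 µL sample volume are assumptions, not values from the protocol.

```python
# Average residue masses (g/mol) for the residues in RADA16-I.
RESIDUE_MASS = {"R": 156.19, "A": 71.08, "D": 115.09}
WATER = 18.02              # added once for the free peptide chain
N_ACETYL_GAIN = 42.04      # H replaced by CH3CO at the N-terminus
C_AMIDE_CHANGE = -0.98     # OH replaced by NH2 at the C-terminus

def peptide_mw(seq, acetylated=True, amidated=True):
    mw = sum(RESIDUE_MASS[res] for res in seq) + WATER
    if acetylated:
        mw += N_ACETYL_GAIN
    if amidated:
        mw += C_AMIDE_CHANGE
    return mw

def w_v_percent_to_mM(percent, mw):
    """1% (w/v) = 10 g/L; convert to mmol/L."""
    return percent * 10.0 / mw * 1000.0

def salt_stock_volume(v_sample, c_stock, c_final):
    """Stock volume v such that c_stock * v = c_final * (v_sample + v).

    Assumes the peptide solution itself contains no salt (deionized water)."""
    if c_stock <= c_final:
        raise ValueError("stock must be more concentrated than the target")
    return c_final * v_sample / (c_stock - c_final)

def loss_tangent(g_storage, g_loss):
    """tan(delta) = G''/G'; values below 1 indicate solid-like (gel) behaviour."""
    return g_loss / g_storage

mw = peptide_mw("RADA" * 4)                         # about 1713 g/mol
conc = w_v_percent_to_mM(1.0, mw)                   # about 5.8 mM at 1% (w/v)
v_stock = salt_stock_volume(100.0, 1500.0, 150.0)   # µL of assumed 1.5 M stock per 100 µL
```

Adding a 1× buffer of about 150 mM can only approach the target concentration asymptotically, never reach it. For that reason, the function rejects any stock that is not more concentrated than the target.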

Quantitative Analysis of Biomaterial Properties

The in vivo performance of a biomaterial is dictated by its physico-chemical properties. The following table synthesizes quantitative data linking specific material characteristics to the biological outcome of bone formation, as identified in an empirical model [23].

Table 2: Empirical Model Linking Biomaterial Properties to Intra-Oral Bone Formation

| Biomaterial Property | Measurement Technique | Optimal Range for Bone Formation | Impact on Biological Response |
|---|---|---|---|
| Surface Roughness | Atomic Force Microscopy (AFM) | Higher roughness (Sa > 1.2 µm) | Significantly enhances osteointegration and bone deposition compared to smooth surfaces [23] |
| Chemical Composition | X-ray Diffraction (XRD) | Hydroxyapatite (HAp) and Biphasic Calcium Phosphate (BCP) | HAp promotes osteoconductivity; BCP (HAp/β-TCP) offers tunable degradation and bioactivity [23] |
| Macroporosity | Micro-Computed Tomography (μCT) | Pore diameter > 100 µm, interconnected | Critical for facilitating osteogenesis and angiogenesis by allowing cell migration and nutrient diffusion [23] |
| Microporosity | Mercury Porosimetry | Pore diameter < 10 µm | Increases specific surface area, enhancing protein adsorption and influencing the early inflammatory response [23] |
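The ranges in Table 2 can serve as a simple screening checklist for candidate scaffolds. The sketch below encodes them as threshold tests. The field names and example measurements are hypothetical. Passing every check only means a scaffold falls inside the empirically favourable ranges; it is not a prediction of bone formation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ScaffoldMeasurements:
    roughness_sa_um: float          # areal roughness Sa from AFM, micrometres
    phases: frozenset               # crystalline phases from XRD, e.g. {"HAp", "beta-TCP"}
    macropore_diameter_um: float    # from micro-CT, micrometres
    macropores_interconnected: bool
    micropore_diameter_um: float    # from mercury porosimetry, micrometres

def table2_criteria(s):
    """Which Table 2 ranges the scaffold falls inside (True = within range)."""
    return {
        "surface_roughness": s.roughness_sa_um > 1.2,
        # HAp alone, or biphasic HAp/beta-TCP (BCP)
        "composition": "HAp" in s.phases and s.phases <= {"HAp", "beta-TCP"},
        "macroporosity": s.macropore_diameter_um > 100 and s.macropores_interconnected,
        "microporosity": s.micropore_diameter_um < 10,
    }

# Hypothetical candidates for illustration.
ROUGH_BCP = ScaffoldMeasurements(1.5, frozenset({"HAp", "beta-TCP"}), 300.0, True, 5.0)
SMOOTH_HAP = ScaffoldMeasurements(0.4, frozenset({"HAp"}), 80.0, True, 5.0)
```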

Applications in Therapeutics and Diagnostics

The programmability and dynamic nature of synthetic biomaterials unlock a wide array of applications in biomedicine, particularly as therapeutic and imaging agents [19].

Therapeutic Agents